Preface

This document provides the information you need to install, administer and manage all of the components of a QVD solution. As an open source product, QVD is constantly growing and improving. We make every effort to keep our documentation as complete as possible, and we encourage readers to tell us about any improvements that could be made to it. If you have any questions or suggestions, please send an email to info@theqvd.com.

The document is divided into three main parts:

-

The first part discusses the core components that form a solution, how they interact, and how they are installed and configured.

-

The second part deals with integration issues and with tuning QVD's behaviour to improve performance or scalability.

-

The third part gives you the information you may need to create and administer the operating system disks, images and virtual machines that are loaded in each virtual desktop.

We also provide a bibliography of external material to help you better understand the different technologies involved in a QVD solution, as well as a glossary of commonly used terms.

This manual is complemented by the Architecture manual, which we recommend reading to understand certain concepts.

1. What is QVD?

QVD (Quality Virtual Desktop) is a VDI (Virtual Desktop Infrastructure) solution focused on Linux. The software is designed to completely virtualize the Linux desktop, so that client systems can connect to a central server and load their desktop environments and applications. This means that when users work from their local machine, all the programs, applications, processes and data they use remain on the server and are executed centrally. Virtualization offers a number of advantages:

-

Users can move between computers on a network and continue working as if they were at the same desktop, with full access to all their applications and data

-

Administrators have greater control over the applications installed on users' systems, and can manage user data more easily, for example to make backups or scan for viruses

-

It is easier for administrators to provide new desktop environments and deploy applications for new users

-

Downtime is reduced in case of hardware errors

-

Users can access their desktop and applications by means of a variety of different devices, including laptops, PCs and smartphones

-

Users can work remotely and securely with the same desktop and applications, without needing a VPN

-

Improved general system and data security

-

Reduced hardware, maintenance and administration costs

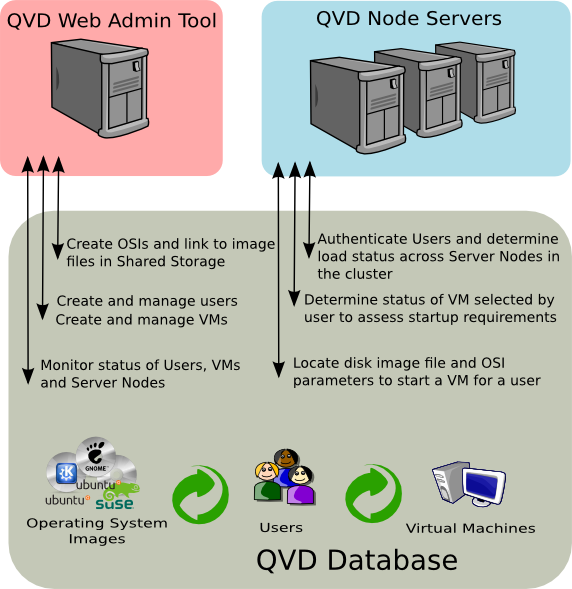

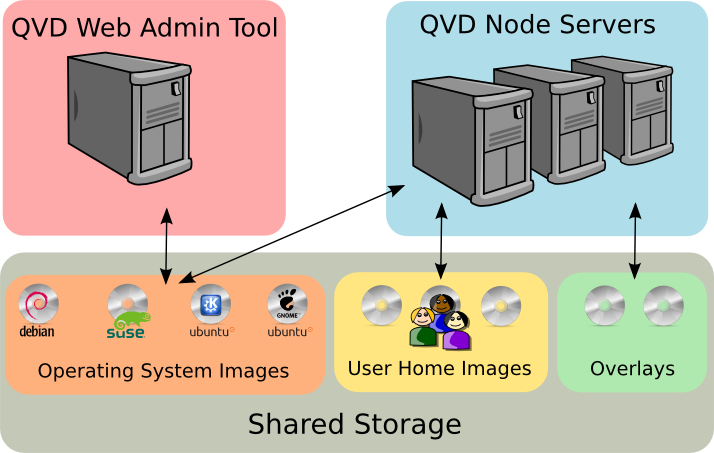

The QVD server virtualizes each Linux desktop. This can be done using either of two virtualization technologies. Most commonly, the Linux Kernel-based Virtual Machine (KVM) is used as a full type 1 (bare-metal) hypervisor; however, since QVD 3.1 it is also possible to use Linux Containers (LXC) to virtualize at the operating-system level. This virtualization keeps each user's environment as a discrete entity, improving security and stability. It also makes it possible to offer users a variety of operating systems or environments, depending on their requirements. These are loaded as independent images on the QVD server.

In general, you will only load one or two images for all your users. These images provide the base operating system and the working environment, which is replicated for each virtual machine. When a user connects to the server using the client application, a virtual machine is started exclusively for that user. This also provides a "jail" that prevents misbehaviour in one user's system from affecting other users. When the user disconnects, the virtual machine is stopped. This means that if the user's environment has developed a problem, disconnecting can revert the environment to its original state. This provides a higher level of security than if the user were working on an independent workstation.

There are two options for keeping user data, such as desktop settings, documents and other user-specific information. The first, and most common, method is to store the information on an NFS share. In this way, the data can be stored on a NAS device or in a SAN, where it can be managed easily. The second option is to load a second image into the virtual machine. This image is persistent: the user can update it, and the changes are kept each time the image is reloaded. Both methods are equally valid. By keeping user data separate from the core image, QVD helps ensure that, if a core image is damaged or the system fails, the time needed for disaster recovery is kept to a minimum.

Users access the desktop from each workstation through a client that uses the NX protocol to communicate with the server and deliver the desktop and applications to the client. The NX protocol is used to handle remote X Window connections and provides superior compression, which means high performance even when the desktop is accessed over a low-bandwidth connection. Moreover, QVD can wrap the NX protocol in SSL to protect the connection, so that users can work safely and securely even when they access their desktops remotely. QVD provides client software for a variety of operating systems and devices: Linux, Windows, OS X, and even Android and iOS. This means that wherever you are, regardless of the system you have access to, you will be able to run the client application to access your desktop.

1.1. Some notes about this manual

In general, we assume that most QVD users will take advantage of the KVM virtualization offered in the product. As a result, most of this guide assumes that the product is configured this way. If you choose LXC for virtualization, there may be some differences in the configuration. Where this is especially important, we have included information for both virtualization platforms. We have also included a separate chapter about LXC virtualization that provides additional help for users who decide to explore this option. Similarly, although we offer packages for other Linux distributions such as SUSE Linux Enterprise Server (SLES), we assume that most users will use Ubuntu Linux. As a result, many of the commands in this guide, together with the locations of the configuration files, etc., assume that you are using Ubuntu Linux. For SLES users, we have tried to indicate the significant differences in configuration.

Administration of QVD

QVD can be administered using any of the following three tools:

-

QVD CLI: a command-line utility that can be installed on any node and lets you manage the solution from the command line and automate tasks through scripts.

-

WAT: a web-based administration tool that lets the administrator access the solution remotely and perform a variety of administrative tasks through a standard web browser.

-

API: the API (new in QVD 4) exposes a REST interface that lets you integrate the solution with third-party software. It is also required for the other two tools to work, since it centralizes the administration logic.

Both the command-line utility (CLI) and the WAT require access to the QVD API and must be configured for this purpose. The API must be installed on at least one node of the solution, although it can be installed on as many nodes as required. Almost all the operations performed through any of these tools simply change values in the QVD-DB (via the API); the components running on the QVD nodes then act on those changes. The QVD administration tools are also used to load new images into QVD and to configure their runtime parameters. To support this, the tools need access to the folders where the images are stored and from which the virtualization nodes access them. This access is generally provided through a shared network file system such as NFS.

1. Basic configuration of QVD

In general, both the architectural and the administrative components of QVD require a connection to the product database. This means that these components need a configuration file containing the database connection details. This is also the only configuration that has to be done through files: the rest of the solution's configuration is stored in the database and is managed directly through the relevant administration tools.

The HKD configuration is stored in the file /etc/qvd/node.conf. This path is created automatically if you install QVD from the packages we provide. If you use another installation method, you must create it manually; you can start from the configuration template provided:

root@myserver:~# cp -R /usr/share/qvd/config /etc/qvd

The file node.conf must contain at least the following:

#

# QVD Node Configuration

#

nodename = mycomputer

# Database connection information.

# database.host: where the QVD database is found

# database.name: the name of the QVD database

# database.user: the user account needed to connect

# database.password: the password needed to connect

database.host = mycomputer

database.name = qvddb

database.user = qvd

database.password = passw0rd

path.log = /var/log/qvd

log.filename = ${path.log}/qvd.log

log.level = INFO

Make sure that nodename, database.host, database.name, database.user and database.password contain values that match your own setup. Once these settings are in place, any utility that requires access to the database will have the correct configuration to do so.

The logging entries must be set here because the relevant QVD components start before they connect to the database.
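Because a missing or misspelled key in node.conf usually only shows up when a component fails to reach the database, it can be worth checking the file first. The following Python sketch is a hypothetical helper, not part of QVD: it assumes the simple `key = value` layout shown above, with `#` starting a comment line, and reports which of the required keys listed above are missing or empty.

```python
# Keys that must match your own database setup (see the list above).
REQUIRED_KEYS = ("nodename", "database.host", "database.name",
                 "database.user", "database.password")


def parse_qvd_conf(text):
    """Parse 'key = value' lines into a dict.

    Blank lines and lines starting with '#' are skipped. This is an
    illustrative parser for the node.conf layout above, not QVD's own.
    """
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")  # split on the first '=' only
        if not sep:
            raise ValueError(f"not a 'key = value' line: {raw!r}")
        settings[key.strip()] = value.strip()
    return settings


def missing_required(settings):
    """Return the required keys that are absent or have an empty value."""
    return [key for key in REQUIRED_KEYS if not settings.get(key)]


if __name__ == "__main__":
    sample = """
    # QVD Node Configuration
    nodename = mycomputer
    database.host = mycomputer
    database.name = qvddb
    database.user = qvd
    """
    print(missing_required(parse_qvd_conf(sample)))  # ['database.password']
```

On a real node you would pass it the contents of /etc/qvd/node.conf instead of the inline sample.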

The API component is configured in the file /etc/qvd/api.conf. Its contents are the same as those of node.conf, so you can use that file as a starting point. Only two additional parameters are required:

api.user = qvd
api.group = qvd

These parameters define the user and group under which the API runs. The values shown here are only an example. For security reasons, and in line with general best practice, do not use the root user for this purpose.

Since QVD 4.0, the WAT and CLI components no longer connect to the database but to the API, so they have their own configuration files. For the CLI, this is /etc/qvd/qa.conf:

qa.url = https://api.yourqvdserver.com:443/
qa.tenant = *
qa.login = superadmin
qa.password = superadmin
qa.format = TABLE
qa.insecure = 0
qa.ca = /etc/qvd/certs/ca.conf

For the WAT, it is /usr/lib/qvd/lib/wat/config.json:

{

"apiUrl": "https://api.yourqvdserver.com:443"

}

In any case, you should configure all these files according to your own requirements.

1.1. Other QVD configuration parameters

Apart from the configuration file, QVD stores most of its configuration in the QVD-DB. A wide range of parameters applies to the different components of the QVD infrastructure. These parameters can be configured using the QVD CLI administration utility; the appropriate steps are discussed in the chapter titled QVD CLI administration utility. Since version 4.0 they can also be changed through the WAT, as explained in its own manual.

Although any of the following configuration parameters can be set in the node.conf file, the values stored in the QVD-DB always take precedence. This means that once a setting is changed in the database, the value stored in the configuration file becomes obsolete, which can cause confusion in later administrative tasks. We therefore strongly recommend updating these options only in the database, using the QVD CLI administration utility.
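The precedence rule can be pictured as a dictionary merge. The Python sketch below is only a model of the behaviour described in this section, and its function names are hypothetical: values from the QVD-DB override those read from node.conf, except for the logging keys that, as the Logging section explains, are read from the file before the database is reached. It also lists the file entries that a database value has made obsolete.

```python
# Read before the database connection exists, so a DB copy is ignored
# (see the Logging section).
FILE_ONLY_KEYS = frozenset({"path.log", "log.filename", "log.level"})


def effective_config(file_settings, db_settings):
    """Merge settings: QVD-DB values win, except for file-only keys."""
    merged = dict(file_settings)
    for key, value in db_settings.items():
        if key not in FILE_ONLY_KEYS:
            merged[key] = value
    return merged


def obsolete_file_entries(file_settings, db_settings):
    """Keys set in node.conf whose value is overridden by a different DB value."""
    return sorted(
        key for key, value in file_settings.items()
        if key not in FILE_ONLY_KEYS
        and key in db_settings and db_settings[key] != value
    )


if __name__ == "__main__":
    in_file = {"l7r.port": "8443", "log.level": "INFO"}
    in_db = {"l7r.port": "443", "log.level": "DEBUG"}
    print(effective_config(in_file, in_db))       # l7r.port comes from the DB
    print(obsolete_file_entries(in_file, in_db))  # ['l7r.port']
```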

This section describes some of these additional configuration parameters. Although many other settings can be seen using the QVD CLI, some of them (such as the parameters that start with "intern") must never be modified without the help of a QVD support engineer. In general, we do not recommend changing any of these configuration parameters without the supervision of the QVD support team. In fact, these parameters do not appear in the WAT.

Note that QVD-DB must already be installed and configured before you can set these parameters.

Some configuration parameters are related to parameters of other components of the system. In such cases, you may need to update the value in more than one place. A typical example is the l7r.port setting, which affects the client's client.host.port setting.

We stress that the intern parameters are for the product's internal use and administrators are not expected to modify them. They may change in any release of the software and are designed to help developers debug the product's internal behaviour.

1.1.1. QVD system paths

The following options are available to change the paths that QVD uses to look for applications, certificates and other QVD-specific data.

path.run = /var/run/qvd

path.log = /var/log

path.tmp = /var/tmp

path.storage.root = /var/lib/qvd/storage

path.storage.staging = ${path.storage.root}/staging

path.storage.images = ${path.storage.root}/images

path.storage.overlays = ${path.storage.root}/overlays

path.storage.homes = ${path.storage.root}/homes

path.ssl.certs = ${path.run}/ssl

path.ssl.ca.system = /etc/ssl/certs

path.ssl.ca.personal = .qvd/certs

path.cgroup = /sys/fs/cgroup

path.cgroup.cpu.lxc = /sys/fs/cgroup/cpu/lxc

path.serial.captures = ${path.tmp}/qvd

command.kvm = kvm

command.kvm-img = kvm-img

command.nxagent = /usr/bin/nxagent

command.nxdiag = /usr/bin/nxdiag.pl

command.x-session = /etc/X11/Xsession

command.useradd = /usr/sbin/useradd

command.userdel = /usr/sbin/userdel

The above values are those set by default.

-

* path.run *: the directory for run-time files (usually referenced by other path options)

-

* path.log *: base path for storing logs

-

* path.tmp *: the path to store temporary files

-

* path.storage.root *: the base path for the main area of storage used by QVD

-

* path.storage.staging *: this directory is used to temporarily store DIs. From version 4.0, it can also be used as a multitenant image library.

-

* path.storage.images *: the image directory used to store registered DIs

-

* path.storage.overlays *: the overlay directory used to hold the qcow overlay images

-

* path.storage.homes *: the directory where users' home directory images are stored when qcow is used

-

* path.ssl.certs *: the path to store SSL certificates used by QVD

-

* path.ssl.ca.system *: the path where the system CA certificates are stored

-

* path.ssl.ca.personal *: the path where the local or personal CA certificates are stored

-

* path.serial.captures *: the location used to store serial captures (if enabled)

-

* command.kvm *: the command to execute KVM

-

* command.kvm-img *: the command used to work with QEMU virtual disks within KVM

-

* command.nxagent *: the access path to nxagent binary (it is usually only used by the VMA in an OSF)

-

* command.nxdiag *: the path to the nxdiag.pl script used by the VMA to make sure that nxagent is running correctly

-

* command.x-session *: the path to the Xsession shell script executed by the system when an X Window session is started

-

* command.useradd *: the path to the useradd command used by the system to add users

-

* command.userdel *: the path to the userdel command used by the system to delete users
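Several of the defaults above refer to other keys with the ${...} syntax. The following Python sketch shows how such references resolve, assuming they are plain textual substitutions (an assumption based on how the defaults read, not on QVD's source). The dictionary reproduces a subset of the defaults listed above.

```python
import re

_REFERENCE = re.compile(r"\$\{([^}]+)\}")

# A subset of the default values listed above.
DEFAULTS = {
    "path.run": "/var/run/qvd",
    "path.tmp": "/var/tmp",
    "path.storage.root": "/var/lib/qvd/storage",
    "path.storage.staging": "${path.storage.root}/staging",
    "path.storage.images": "${path.storage.root}/images",
    "path.storage.overlays": "${path.storage.root}/overlays",
    "path.storage.homes": "${path.storage.root}/homes",
    "path.ssl.certs": "${path.run}/ssl",
    "path.serial.captures": "${path.tmp}/qvd",
}


def expand(settings):
    """Replace every ${key} with that key's (recursively expanded) value.

    Raises KeyError for a reference to an undefined key and ValueError
    for circular references.
    """
    resolved = {}

    def resolve(key, chain):
        if key in resolved:
            return resolved[key]
        if key in chain:
            raise ValueError("circular reference: " + " -> ".join(chain + (key,)))
        value = _REFERENCE.sub(
            lambda match: resolve(match.group(1), chain + (key,)), settings[key])
        resolved[key] = value
        return value

    for key in settings:
        resolve(key, ())
    return resolved


if __name__ == "__main__":
    custom = {**DEFAULTS, "path.storage.root": "/srv/qvd"}
    print(expand(custom)["path.storage.images"])  # /srv/qvd/images
```

Under this plain-substitution model, overriding path.storage.root moves the staging, images, overlays and homes directories with it, provided those keys keep their default ${path.storage.root} form.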

1.1.2. Logging

The following options can be used to change the path to the log file and to control the logging level.

path.log = /var/log

log.filename = ${path.log}/qvd.log

log.level = INFO

The values above are the default values.

-

* path.log *: base path of the log files

-

* log.filename *: the path to the log file

-

* log.level *: the logging level; possible values are ALL, DEBUG, INFO, WARN, ERROR, FATAL and OFF

These options must be set in the QVD configuration file /etc/qvd/node.conf because the logging system starts before the connection to the database is made. If these values are set in the database, they are ignored.
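log.level acts as a threshold: a message is written only if its severity is at or above the configured level, so INFO keeps INFO, WARN, ERROR and FATAL messages and drops DEBUG ones. The Python sketch below models that ordering for the values listed above; it illustrates the semantics and is not Log4perl's implementation.

```python
# Levels listed for log.level, from most to least verbose.
LEVELS = ("ALL", "DEBUG", "INFO", "WARN", "ERROR", "FATAL", "OFF")
_RANK = {name: rank for rank, name in enumerate(LEVELS)}
# Levels a message can actually be logged at (ALL and OFF are thresholds only).
MESSAGE_LEVELS = LEVELS[1:-1]


def is_logged(message_level, configured_level):
    """Return True if a message at message_level passes the log.level threshold."""
    if configured_level not in _RANK:
        raise ValueError(f"unknown log.level: {configured_level}")
    if message_level not in MESSAGE_LEVELS:
        raise ValueError(f"not a message level: {message_level}")
    # ALL ranks lowest (everything passes); OFF ranks highest (nothing passes).
    return _RANK[message_level] >= _RANK[configured_level]


if __name__ == "__main__":
    for level in MESSAGE_LEVELS:
        print(level, is_logged(level, "INFO"))
```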

QVD logs through Log::Log4perl, a standard Perl module that offers many logging options. You can choose to send the output to syslog, to a file or even to a database. To send the log to syslog, the following settings can be added to node.conf:

log4perl.appender.SYSLOG = Log::Dispatch::Syslog
log4perl.appender.SYSLOG.layout = Log::Log4perl::Layout::PatternLayout
log4perl.appender.SYSLOG.layout.ConversionPattern = %d %P %F %L %c - %m%n
log4perl.rootLogger = DEBUG, SYSLOG
log.level = DEBUG

For a complete breakdown of the logging options available in Log4perl, consult the Log4perl documentation.

If you choose to save your logs to a file, make sure you use a suitable method, such as log rotation, to handle the growing size of the log file.

1.1.3. L7R configuration options

You can specify the following additional options to control the L7R:

l7r.as_user = root

l7r.use_ssl = 1

l7r.port = 8443

l7r.address = *

l7r.pid_file = ${path.run}/l7r.pid

l7r.auth.plugins = default

l7r.loadbalancer.plugin = default

l7r.loadbalancer.plugin.default.weight.ram = 1

l7r.loadbalancer.plugin.default.weight.cpu = 1

l7r.loadbalancer.plugin.default.weight.random = 1

The values that appear above are those set by default.

-

* l7r.as_user *: the user under which the QVD L7R process runs

-

* l7r.use_ssl *: whether to use SSL to encrypt client connections

-

* l7r.port *: the port on which the L7R listens for client connections (the client.host.port setting of each client should be set to the same value)

-

* l7r.address *: the IP address to which the L7R binds

-

* l7r.pid_file *: the path to the PID file that is created while the L7R process is running

-

* l7r.auth.plugins *: can be used to provide additional authentication plugins such as OpenSSO

-

* l7r.loadbalancer.plugin *: can be used to include an alternative load-balancing algorithm plugin

-

* l7r.loadbalancer.plugin.default.weight.ram *: the weight assigned to RAM resources by the default load-balancing algorithm

-

* l7r.loadbalancer.plugin.default.weight.cpu *: the weight assigned to CPU resources by the default load-balancing algorithm

-

* l7r.loadbalancer.plugin.default.weight.random *: the weight assigned to the randomizer by the default load-balancing algorithm
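This manual does not describe how the default plugin combines the three weights. Purely as a hypothetical illustration of weighted scoring (not QVD's actual algorithm), the Python sketch below assumes each node reports its free RAM and idle CPU as fractions between 0 and 1, adds a random term, and picks the node with the highest weighted sum.

```python
import random


def pick_node(nodes, weight_ram=1.0, weight_cpu=1.0, weight_random=1.0, rng=random):
    """Hypothetical weighted scoring, NOT QVD's actual algorithm.

    nodes maps a node name to (free_ram_fraction, idle_cpu_fraction),
    each in [0, 1]. The three weights mirror the
    l7r.loadbalancer.plugin.default.weight.* settings.
    """
    if not nodes:
        raise ValueError("no nodes available")

    def score(name):
        free_ram, idle_cpu = nodes[name]
        return (weight_ram * free_ram
                + weight_cpu * idle_cpu
                + weight_random * rng.random())

    return max(nodes, key=score)  # each node is scored exactly once


if __name__ == "__main__":
    farm = {"node1": (0.2, 0.9), "node2": (0.8, 0.5), "node3": (0.5, 0.5)}
    print(pick_node(farm, rng=random.Random(42)))
```

In this model, setting the random weight to 0 makes the choice deterministic, while larger values spread sessions across nodes whose load is similar.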

1.1.4. HKD configuration options

You can specify the following additional options to control the HKD:

hkd.vm.starting.max = 6

The value shown above is the default.

-

* hkd.vm.starting.max *: the maximum number of virtual machines that the HKD allows to be in the "starting" state at the same time on a server node; further virtual machines are not launched on that node until the count drops below this limit.
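The limit can be thought of as an admission check. The Python sketch below is a hypothetical model, not the HKD's code: given the virtual machines waiting to start on a node and the number already in the "starting" state, it returns those that may start now without exceeding hkd.vm.starting.max (default 6). The VM names are made up for the example.

```python
def vms_to_start(pending, starting_now, starting_max=6):
    """Hypothetical admission check modelled on hkd.vm.starting.max.

    pending: VMs waiting to be started on this node, in priority order.
    starting_now: how many VMs on the node are already "starting".
    Returns the VMs that may begin starting now; the rest stay queued.
    """
    if starting_max < 1:
        raise ValueError("starting_max must be at least 1")
    free_slots = max(0, starting_max - starting_now)
    return list(pending)[:free_slots]


if __name__ == "__main__":
    queue = ["vm-101", "vm-102", "vm-103", "vm-104"]
    print(vms_to_start(queue, starting_now=4))  # ['vm-101', 'vm-102']
```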

1.1.5. VM options

Several options can be configured to control the behaviour of virtual machines in QVD:

vm.overlay.persistent = 0
vm.kvm.virtio = 1
vm.network.ip.start =
vm.network.netmask =
vm.network.gateway =
vm.network.bridge =
vm.network.dns_server =

Note that if you use the legacy CLI administration utility, you may also need these parameters:

vm.vnc.redirect = 0
vm.vnc.opts =
vm.serial.redirect = 1
vm.serial.capture = 0

The values shown above are the values set by default.

-

* vm.overlay.persistent *: whether to use persistent overlays for temporary files and log files. Note that this persistence does not extend across nodes if the overlay is stored locally, for example in a btrfs configuration

-

* vm.kvm.virtio *: whether the virtio driver is used for the network connection (the OSF running in the image must support virtio)

-

* vm.vnc.redirect *: enables the built-in VNC server when using KVM virtualization (useful for troubleshooting an image)

-

* vm.vnc.opts *: additional configuration for the KVM built-in VNC server

-

* vm.serial.redirect *: enables the serial port console when using KVM virtualization

-

* vm.serial.capture *: captures the serial console output to a file

-

* vm.network.ip.start *: the first IP address of the range assigned to virtual machines in the network reserved for QVD

-

* vm.network.netmask *: the CIDR network mask that sets the size of the network reserved for QVD

-

* vm.network.gateway *: the IP address of the firewall in the network reserved for QVD, which is passed to the virtual machines via DHCP

-

* vm.network.bridge *: the name of the network bridge interface

-

* vm.network.dns_server *: the IP address of the DNS server that is passed to the virtual machines via DHCP in the network reserved for QVD

Be aware that the configuration of vm.network is generally required for the QVD nodes to work correctly.
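Taken together, vm.network.ip.start and vm.network.netmask describe the pool of addresses available to virtual machines. Assuming the netmask is given as a CIDR prefix length (for example 24) and that addresses are handed out upward from ip.start, the Python sketch below lists that pool with the standard ipaddress module, skipping the gateway and DNS server. The example addresses are hypothetical, and this is not QVD's allocator.

```python
import ipaddress


def vm_address_pool(ip_start, netmask_bits, gateway, dns_server=None):
    """List usable VM addresses from ip_start to the end of the network.

    netmask_bits is a CIDR prefix length such as 24. The gateway (and the
    DNS server, if given) are excluded from the pool.
    """
    network = ipaddress.ip_network(f"{ip_start}/{netmask_bits}", strict=False)
    gateway_ip = ipaddress.ip_address(gateway)
    if gateway_ip not in network:
        raise ValueError(f"gateway {gateway} is outside {network}")
    reserved = {gateway_ip}
    if dns_server:
        reserved.add(ipaddress.ip_address(dns_server))
    start = ipaddress.ip_address(ip_start)
    return [ip for ip in network.hosts() if ip >= start and ip not in reserved]


if __name__ == "__main__":
    pool = vm_address_pool("10.3.15.50", 24, "10.3.15.1", "10.3.15.254")
    print(len(pool), pool[0], pool[-1])  # 204 10.3.15.50 10.3.15.253
```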

2. QVD-DB

The QVD-DB is the glue that joins all the QVD components together. It uses PostgreSQL 9.3 or later as its underlying DBMS (as of QVD 4.1).

All the configuration and runtime information for the entire QVD infrastructure is stored in the database, and if it fails, the whole platform stops working. For this reason, we strongly recommend installing the database in high-availability mode. You can find out how to configure PostgreSQL in an HA setup in

and

The actual hardware requirements for QVD-DB are very modest: any server with just two CPU cores and 2 GB of RAM can run the database.

QVD only works with PostgreSQL 9.3 or later, since it relies on PostgreSQL's notification functions.

2.1. Installation and configuration of QVD-DB

On the system where you are going to install QVD-DB, add the QVD repository to your APT sources.

First, add the public key of the QVD packages to your trusted keys (as root):

# wget -qO - https://www.theqvd.com/packages/key/public.key | sudo apt-key add -

Now, add the repository:

/etc/apt/sources.list.d/qvd.list
# apt-get update

For commercial packages:

# echo "deb http://$USER:$PASSWORD@theqvd.com/commercial-packages/ubuntu/xenial QVD-4.1.0 main" > \
  /etc/apt/sources.list.d/qvd.list
# apt-get update

$USER and $PASSWORD are the credentials received when the subscription is purchased.

The recommended way to install the central database is with the perl-qvd-db package. You will also need the PostgreSQL client software to carry out these steps (see the PostgreSQL documentation). All these steps require root privileges:

# apt-get install perl-qvd-db

For SLES:

Firstly, add the public key of the QVD packages to your trusted keys (as root):

# rpm --import https://www.theqvd.com/packages/key/public.key

Now, add the repository:

# zypper ar http://theqvd.com/packages/sles/12SP1/QVD-4.1.0 QVD
# zypper ref

For commercial packages:

# zypper ar http://theqvd.com/commercial-packages/sles/12SP1/QVD-4.1.0 QVD
User Name: $USER
Password: $PASSWORD
# zypper ref

$USER and $PASSWORD are the credentials received when the subscription is purchased.

Use zypper to install the database:

# zypper install perl-QVD-DB

For CentOS:

Firstly, add the public key of the QVD packages to your trusted keys (as root):

# rpm --import https://www.theqvd.com/packages/key/public.key

Now, add the repository:

# yum-config-manager --add-repo http://theqvd.com/packages/centos/7.2/QVD-4.1.0/

For commercial packages:

# echo "[QVD-4.1.0]" > /etc/yum.repos.d/QVD-4.1.0.repo
# echo "name=QVD-4.1.0" >> /etc/yum.repos.d/QVD-4.1.0.repo
# echo "baseurl=http://$USER:$PASSWORD@theqvd.com/commercial-packages/centos/7.2/QVD-4.1.0/" | sed 's/@\(.*@\)/%40\1/' >> /etc/yum.repos.d/QVD-4.1.0.repo
# echo "enabled=1" >> /etc/yum.repos.d/QVD-4.1.0.repo

$USER and $PASSWORD are the credentials received when the subscription is purchased.

Use yum to install the database:

# yum install perl-QVD-DB

2.1.1. Creation of the user and the QVD database

You need to create a PostgreSQL user to access the QVD database, and you need to create the actual database in which QVD creates its tables and stores its data. To do this, use the sudo command to switch to the postgres account:

$ sudo su - postgres

As the postgres user, you can create PostgreSQL user accounts with the createuser command. For example, to create a user called qvd, use the following command. The -P option makes createuser prompt for the new user's password, while -S, -D and -R specify that the new role is not a superuser and cannot create databases or other roles.

postgres@myserver:~$ createuser -SDRP qvd
Enter password for new role: passw0rd
Enter it again: passw0rd

For more information about this command, see the PostgreSQL documentation: http://www.postgresql.org/docs/9.3/static/app-createuser.html

The new user can now be assigned as the owner of a database. To create a database for QVD and assign its owner, use the createdb command. Use the -O option to set the owner of the database to the account you want to use; in this case, the user created in the previous step.

postgres@myserver:~$ createdb -O qvd qvddb

For more information about this command, see the PostgreSQL documentation: http://www.postgresql.org/docs/9.3/static/app-createdb.html

2.1.2. PostgreSQL configuration requirements

To allow concurrent access from all the nodes of the QVD farm while keeping transactions consistent, the transaction isolation level must be changed from read committed to serializable. This is a very important step that must not be skipped; otherwise the database could become inconsistent and QVD may stop working.

It is also necessary to allow network access to the database. By default, PostgreSQL only listens for connections on localhost; this must be changed so that it listens on all interfaces.

To do this, edit the PostgreSQL configuration files postgresql.conf and pg_hba.conf. On Ubuntu they are located in /etc/postgresql/9.3/main; on SUSE, they are in /var/lib/pgsql/data.

The transaction isolation level is controlled through the default_transaction_isolation setting. To enable general network access to PostgreSQL, change the listen_addresses setting from localhost to *.

root@myserver:~# cd /etc/postgresql/9.3/main  # this would be /var/lib/pgsql/data on SLES
root@myserver:/etc/postgresql/9.3/main# vi postgresql.conf
listen_addresses = '*'
default_transaction_isolation = 'serializable'
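If you manage several database hosts, you may prefer to script this edit rather than make it by hand in vi. The Python sketch below is a hypothetical helper that assumes the usual `name = value` form of postgresql.conf. For each parameter it rewrites the last existing or commented-out line, because PostgreSQL uses the last entry when a parameter appears more than once, and appends the setting if it is not present at all.

```python
import re

# The two settings required by QVD, as shown above.
QVD_SETTINGS = {
    "listen_addresses": "'*'",
    "default_transaction_isolation": "'serializable'",
}


def apply_settings(conf_text, settings=QVD_SETTINGS):
    """Return postgresql.conf text with each parameter set to the given value."""
    lines = conf_text.splitlines()
    for name, value in settings.items():
        # Matches "name = ..." as well as commented-out "#name = ..." lines.
        pattern = re.compile(rf"^\s*#?\s*{re.escape(name)}\s*=")
        matches = [i for i, line in enumerate(lines) if pattern.match(line)]
        new_line = f"{name} = {value}"
        if matches:
            lines[matches[-1]] = new_line  # the last entry is the one PostgreSQL uses
        else:
            lines.append(new_line)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    sample = "#listen_addresses = 'localhost'\t# what IP address(es) to listen on\nport = 5432\n"
    print(apply_settings(sample), end="")
```

Write the returned text back to postgresql.conf; the changes only take effect after PostgreSQL is restarted.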

To enable network access for the qvd user, add the following line to pg_hba.conf (with the following format: host database user CIDR-address auth-method [auth-options]).

root@myserver:/etc/postgresql/9.3/main# vi pg_hba.conf
host qvddb qvd 192.168.0.0/24 md5

Make sure you replace the example network 192.168.0.0/24 with the network your QVD platform uses.
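Before restarting PostgreSQL, it may help to confirm that every node that needs database access (those running the HKD or the API) falls inside the CIDR range you entered; unless another pg_hba.conf line matches them, connections from outside that range are rejected. A minimal Python sketch using the standard ipaddress module, with hypothetical node addresses:

```python
import ipaddress


def nodes_not_covered(cidr, node_ips):
    """Return the node addresses that fall outside a pg_hba.conf CIDR range."""
    network = ipaddress.ip_network(cidr)  # raises ValueError if host bits are set
    return [ip for ip in node_ips if ipaddress.ip_address(ip) not in network]


if __name__ == "__main__":
    hkd_and_api_nodes = ["192.168.0.11", "192.168.0.12", "192.168.1.20"]
    print(nodes_not_covered("192.168.0.0/24", hkd_and_api_nodes))  # ['192.168.1.20']
```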

Restart PostgreSQL for all the changes to take effect.

For Ubuntu:

# service postgresql restart

and for SLES:

# /etc/init.d/postgresql restart

2.2. Provisioning QVD-DB

The QVD-DB package includes a script that populates the QVD database with all the tables QVD needs to work correctly. For this script to work, all the QVD database settings must have been entered correctly in the file /etc/qvd/node.conf. To deploy the database, run qvd-deploy-db.pl, located in /usr/lib/qvd/bin/ (add this directory to your system path if you are going to administer a QVD solution).

# qvd-deploy-db.pl

Once you have executed this command, QVD-DB will be ready to be used by any component inside the QVD environment.

2.3. Testing access to QVD-DB

Check that you can access the database from all the nodes that require it (that is, all the nodes on which the HKD component or the API component is installed). The simplest way to verify this is to connect to the database from each of them and list the tables used by QVD. To do this, make sure the PostgreSQL client is installed on the node you are connecting from. It is provided by the postgresql-client package on Ubuntu and by the postgresql package on SLES:

In Ubuntu:

# sudo apt-get install postgresql-client

In SLES:

# zypper install postgresql

To list the tables in the QVD database using the PostgreSQL client, you can do the following:

anyuser@otherserver:~$ psql -U qvd -W -h myserver qvddb

Password for user qvd:

psql (9.3)

qvddb=> \d

List of relations

Schema | Name | Type | Owner

--------+----------------------------------------------+----------+-------

public | acl_role_relations | table | qvd

public | acl_role_relations_id_seq | sequence | qvd

public | acls | table | qvd

public | acls_id_seq | sequence | qvd

public | administrators | table | qvd

public | administrators_id_seq | sequence | qvd

public | all_acl_role_relations | view | qvd

public | all_role_role_relations | view | qvd

public | configs | table | qvd

public | di_properties | table | qvd

public | di_tags | table | qvd

public | di_tags_id_seq | sequence | qvd

public | dis | table | qvd

public | dis_id_seq | sequence | qvd

public | host_cmds | table | qvd

public | host_counters | table | qvd

public | host_properties | table | qvd

public | host_runtimes | table | qvd

public | host_states | table | qvd

public | hosts | table | qvd

public | hosts_id_seq | sequence | qvd

public | log | table | qvd

public | log_id_seq | sequence | qvd

public | operative_views_in_administrators | view | qvd

public | operative_views_in_tenants | view | qvd

public | osf_properties | table | qvd

public | osfs | table | qvd

public | osfs_id_seq | sequence | qvd

public | properties_list | table | qvd

public | properties_list_id_seq | sequence | qvd

public | qvd_object_properties_list | table | qvd

public | qvd_object_properties_list_id_seq | sequence | qvd

public | role_administrator_relations | table | qvd

public | role_administrator_relations_id_seq | sequence | qvd

public | role_role_relations | table | qvd

public | role_role_relations_id_seq | sequence | qvd

public | roles | table | qvd

public | roles_id_seq | sequence | qvd

public | session | table | qvd

public | ssl_configs | table | qvd

public | tenants | table | qvd

public | tenants_id_seq | sequence | qvd

public | user_cmds | table | qvd

public | user_properties | table | qvd

public | user_states | table | qvd

public | users | table | qvd

public | users_id_seq | sequence | qvd

public | versions | table | qvd

public | views_setups_attributes_administrator | table | qvd

public | views_setups_attributes_administrator_id_seq | sequence | qvd

public | views_setups_attributes_tenant | table | qvd

public | views_setups_attributes_tenant_id_seq | sequence | qvd

public | views_setups_properties_administrator | table | qvd

public | views_setups_properties_administrator_id_seq | sequence | qvd

public | views_setups_properties_tenant | table | qvd

public | views_setups_properties_tenant_id_seq | sequence | qvd

public | vm_cmds | table | qvd

public | vm_counters | table | qvd

public | vm_properties | table | qvd

public | vm_runtimes | table | qvd

public | vm_states | table | qvd

public | vms | table | qvd

public | vms_id_seq | sequence | qvd

public | wat_log | table | qvd

public | wat_log_id_seq | sequence | qvd

public | wat_setups_by_administrators | table | qvd

public | wat_setups_by_administrators_id_seq | sequence | qvd

public | wat_setups_by_tenants | table | qvd

public | wat_setups_by_tenants_id_seq | sequence | qvd

(69 rows)

qvddb=> \q

2.4. Backup copy and restoring QVD-DB

A very simple backup technique is to dump the entire PostgreSQL database to a file:

# pg_dump -U postgres postgres > yourfile.backup

To restore the database from a backup file, you can execute the following command:

# psql -U postgres postgres < yourfile.backup
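For regular backups, it can help to timestamp and compress each dump and to stop when the dump fails. The bash sketch below shows one way to do this. The function names are hypothetical, and it keeps the original's -U postgres connection. Note that the example above dumps the database called postgres, while the psql session listed earlier is connected to qvddb, so pass the name of the database that actually holds your QVD tables. A plain SQL dump like this one is normally restored into an empty database, because it recreates tables that would otherwise already exist.

```shell
# Sketch: timestamped, compressed pg_dump backup plus the matching restore.
# Function names are hypothetical; pass the name of your QVD database.

qvd_db_backup() {  # qvd_db_backup DBNAME [DEST_DIR]; prints the backup path
  local db=$1 dest=${2:-.} file
  file="$dest/${db}-$(date +%Y%m%d-%H%M%S).backup.gz"
  pg_dump -U postgres "$db" | gzip > "$file"
  local -a status=("${PIPESTATUS[@]}")   # exit codes of pg_dump and gzip
  if [ "${status[0]}" -ne 0 ] || [ "${status[1]}" -ne 0 ]; then
    rm -f "$file"                         # do not leave a truncated backup behind
    echo "backup of $db failed" >&2
    return 1
  fi
  printf '%s\n' "$file"
}

qvd_db_restore() {  # qvd_db_restore DBNAME BACKUP_FILE.gz
  gunzip -c "$2" | psql -U postgres "$1"
}
```

For example, qvd_db_backup qvddb /var/backups prints the path of the new file, which can later be passed to qvd_db_restore.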

For advanced operations, see http://www.postgresql.org/docs/9.3/static/backup.html

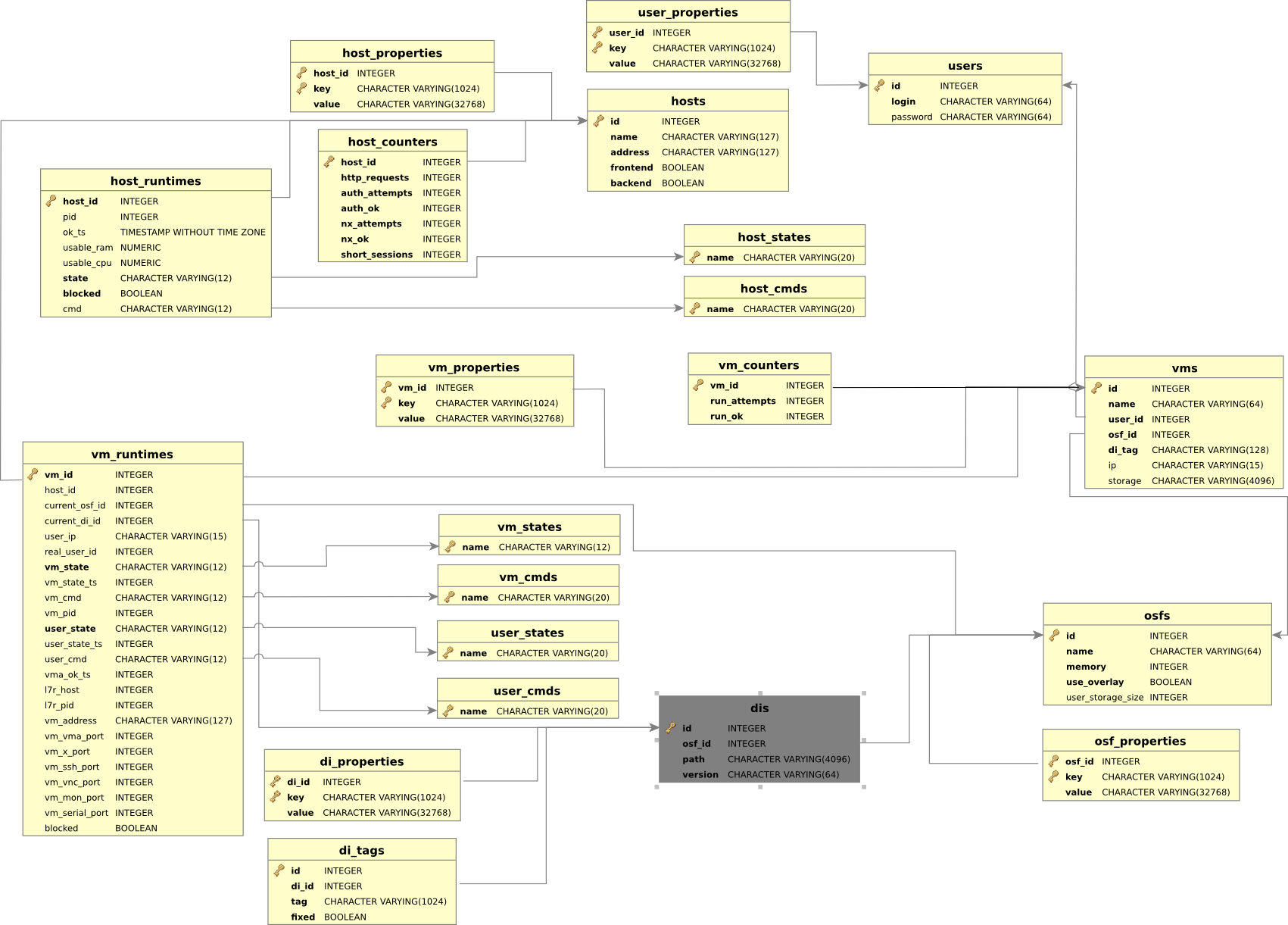

2.5. Relational model of QVD-DB data

The following diagram shows the general model of QVD-DB data.

Administrators should not try to modify the database directly, since doing so is very likely to stop the QVD installation from working.

3. QVD server nodes

The QVD server nodes are the engine of the QVD infrastructure. Each node runs a single binary component, the HKD (House Keeping Daemon), which keeps track of the status of the virtual machines. The HKD starts and stops the virtual machines, supervises the status of each one and updates that status information in the QVD database, so that other nodes and the administration tools can act accordingly. In general, the HKD is responsible for managing the state of the virtual machines.

The HKD also invokes the L7R (Level 7 Router), the intermediary between the client and the server, which is responsible for authenticating users, establishing sessions and routing each user to his or her virtual machine when they connect. In general, the L7R is responsible for managing the state of the user.

The nodes are usually installed as a cluster or farm, so a typical deployment is likely to contain any number of server nodes.

For an overview of the general architecture of a server node, see the QVD Architecture Manual.

3.1. Installation of a QVD server node

On each system where you are going to install the QVD server node components, you will have to add the QVD repository to your apt sources.

Firstly, add the public key of the QVD packages to your trusted keys (as root):

# wget -qO - https://www.theqvd.com/packages/key/public.key | sudo apt-key add -

Now, add the repository:

/etc/apt/sources.list.d/qvd.list
# apt-get update

For commercial packages:

# echo "deb http://$USER:$PASSWORD@theqvd.com/commercial-packages/ubuntu/xenial QVD-4.1.0 main" > \
/etc/apt/sources.list.d/qvd.list
# apt-get update

$USER and $PASSWORD are the credentials received when the subscription is purchased.

To install all the components of a QVD server node and its dependencies, execute the following command:

# apt-get install perl-qvd-node

For SLES:

Firstly, add the public key of the QVD packages to your trusted keys (as root):

# rpm --import https://www.theqvd.com/packages/key/public.key

Now, add the repository:

# zypper ar http://theqvd.com/packages/sles/12SP1/QVD-4.1.0 QVD
# zypper ref

For commercial packages:

# zypper ar http://theqvd.com/commercial-packages/sles/12SP1/QVD-4.1.0 QVD
User Name: $USER
Password: $PASSWORD
# zypper ref

$USER and $PASSWORD are the credentials received when the subscription is purchased.

To install all the components of QVD Server node in SLES, execute the following command:

# zypper install perl-QVD-HKD perl-QVD-L7R

For CentOS:

Firstly, add the public key of the QVD packages to your trusted keys (as root):

# rpm --import https://www.theqvd.com/packages/key/public.key

Now, add the repository:

# yum-config-manager --add-repo http://theqvd.com/packages/centos/7.2/QVD-4.1.0/

For commercial packages:

# echo "[QVD-4.1.0]" > /etc/yum.repos.d/QVD-4.1.0.repo
# echo "name=QVD-4.1.0" >> /etc/yum.repos.d/QVD-4.1.0.repo
# echo "baseurl=http://$USER:$PASSWORD@theqvd.com/commercial-packages/centos/7.2/QVD-4.1.0/" | sed 's/@\(.*@\)/%40\1/' >> /etc/yum.repos.d/QVD-4.1.0.repo
# echo "enabled=1" >> /etc/yum.repos.d/QVD-4.1.0.repo

$USER and $PASSWORD are the credentials received when the subscription is purchased.
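The echo/sed sequence above can also be written as a single function that writes the whole repository file at once. The sketch below is hypothetical (the function names are not part of QVD) and takes the credentials as arguments rather than reading $USER, which most shells already set to your login name. The original sed expression percent-encodes only the first "@", and only when a second "@" follows it (typically an e-mail address used as the user name). The sketch instead encodes every "%", "@" and ":" in the user name and password.

```shell
# Sketch: write the commercial CentOS repository file in one step.
# Function names are hypothetical; pass your real subscription credentials.

# Percent-encode the characters that would break a user:password@host URL.
urlencode_cred() {
  local s=$1
  s=${s//'%'/%25}   # encode '%' first so later escapes are not re-encoded
  s=${s//'@'/%40}
  s=${s//':'/%3A}
  printf '%s' "$s"
}

write_qvd_repo() {  # write_qvd_repo REPO_FILE QVD_USER QVD_PASSWORD
  local repo_file=$1 user pass
  user=$(urlencode_cred "$2")
  pass=$(urlencode_cred "$3")
  cat > "$repo_file" <<EOF
[QVD-4.1.0]
name=QVD-4.1.0
baseurl=http://${user}:${pass}@theqvd.com/commercial-packages/centos/7.2/QVD-4.1.0/
enabled=1
EOF
}
```

For example, as root: write_qvd_repo /etc/yum.repos.d/QVD-4.1.0.repo 'you@example.com' 'your-password', followed by the yum install command below.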

To install all the components of the QVD server node in CentOS, execute the following command:

# yum install perl-QVD-HKD perl-QVD-L7R

This installs all the components of the QVD node together with their dependencies. In general, we recommend installing the QVD CLI administration utility on all QVD server nodes, since it is common to work directly on these systems and it lets you configure QVD quickly. For more information about this utility, see CLI Administration utility of QVD.

3.2. Basic configuration

As with most of the other QVD infrastructure components, each QVD server node requires access to the QVD database. Make sure that the QVD server node configuration file is written correctly, otherwise the node will not work. You can see how to do this in the chapter Basic Configuration of QVD.

Unlike most of the other components, each QVD server node requires an additional entry in the QVD base configuration file in order to be able to search quickly inside the QVD-DB. This single-line entry must be appended to the configuration and contains the nodename, which must match the name you assigned to the node when you registered it in the QVD-DB, whether through the WAT or the QVD CLI administration utility. In general, we recommend naming your nodes after the host name of the system on which they run.

This can be done quickly by doing the following:

echo "nodename=`hostname`" >> /etc/qvd/node.conf
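Appending with >> is not idempotent: running the command above twice leaves two nodename= lines in node.conf. A minimal bash sketch that rewrites an existing entry instead (the function name is hypothetical, and GNU sed is assumed for the in-place edit):

```shell
# Sketch: set nodename= in node.conf exactly once. Hypothetical helper.
set_nodename() {  # set_nodename [CONF_FILE] [NODE_NAME]
  local conf=${1:-/etc/qvd/node.conf} name=${2:-$(hostname)}
  if grep -q '^nodename=' "$conf" 2>/dev/null; then
    # An entry already exists: replace its value rather than adding a line.
    sed -i "s/^nodename=.*/nodename=${name}/" "$conf"
  else
    echo "nodename=${name}" >> "$conf"
  fi
}
```

Called with no arguments, it behaves like the echo command above (host name into /etc/qvd/node.conf), but can safely be rerun.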

3.3. Network requirements

The QVD server nodes use a network bridge and virtual network interfaces to provide network interfaces to each of the virtual machines running on the node. To provide IP addresses to the virtual machines, QVD also runs a DHCP server that assigns IP addresses within the virtual network range to the virtual hosts as they are started. It is therefore very important to choose a network range that is unlikely to conflict with any of your other existing infrastructure.

Services running on systems in the same IP network can be affected by QVD or by any of the virtual machines running in QVD.

Some configuration steps may need to be performed manually to configure the network of a QVD server node correctly. There are often other ways to obtain a suitable network configuration, so we only provide these steps as guidelines.

3.3.1. Set up dnsmasq to be controlled by QVD

QVD uses dnsmasq as a DHCP and DNS server for the virtual machines that are executed in a node. To work correctly, dnsmasq needs to be executed by the HKD process.

Firstly, check that dnsmasq is installed. In Ubuntu, execute the following commands and check the status:

# dpkg -s dnsmasq

In SUSE:

# rpm -q dnsmasq

If it is not installed, install it now with your package manager: apt-get install dnsmasq or zypper install dnsmasq.

By default, the Ubuntu package starts dnsmasq as a daemon running in the background, so you must prevent it from starting automatically. In Ubuntu, this is done with the following commands:

# service dnsmasq stop
# sed -i s/ENABLED=1/ENABLED=0/ /etc/default/dnsmasq

In SLES, dnsmasq is managed with the chkconfig command and is disabled by default, so you should not need to do anything. However, if dnsmasq has been enabled, or simply to make sure, you can disable it by executing the following command as root:

# chkconfig dnsmasq off

This step is essential for QVD to work with KVM virtualization. With LXC, you can specify whether or not DHCP is used to configure the network of its virtual machines.

3.3.2. Configure IP forwarding

IP forwarding is necessary to route the clients to the correct location. You can enable it quickly by executing the following command:

# echo 1 > /proc/sys/net/ipv4/ip_forward

This change is lost when the host system restarts. To make it permanent, edit /etc/sysctl.conf and uncomment the line:

net.ipv4.ip_forward=1

After editing this file, you can force sysctl to reload its configuration by executing:

# sysctl -p
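If you script this step, it is worth making the edit safe to repeat: the sketch below uncomments or rewrites an existing net.ipv4.ip_forward line, or appends one if none exists, so sysctl.conf never collects duplicates. The function name is hypothetical, and GNU sed is assumed for -i and -E.

```shell
# Sketch: persist IP forwarding without adding duplicate lines. Hypothetical helper.
enable_ip_forward_persist() {  # enable_ip_forward_persist [SYSCTL_CONF]
  local conf=${1:-/etc/sysctl.conf}
  # Matches "net.ipv4.ip_forward=...", commented out or not, with optional spaces.
  local re='^[[:space:]]*#?[[:space:]]*net\.ipv4\.ip_forward[[:space:]]*='
  if grep -Eq "$re" "$conf" 2>/dev/null; then
    sed -i -E "s/${re}.*/net.ipv4.ip_forward=1/" "$conf"
  else
    echo 'net.ipv4.ip_forward=1' >> "$conf"
  fi
}
```

Typical use, as root: enable_ip_forward_persist && sysctl -p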

3.3.3. Configuring a network bridge

There are several ways to configure the network bridge and the appropriate routing to make sure that a QVD client is routed to the correct virtual machine.

The easiest method is to configure a static network interface and a set of iptables routing rules that perform the NAT needed to translate IP addresses between your real and virtual networks.

To configure the network in Ubuntu, edit the file /etc/network/interfaces and add the following lines:

auto qvdnet0
iface qvdnet0 inet static
    pre-up brctl addbr qvdnet0
    pre-up iptables -t nat -A POSTROUTING -o eth0 -j SNAT --to-source 192.168.0.2
    pre-up iptables -t nat -A PREROUTING -d 192.168.0.2 -p tcp --dport 8443 -j DNAT --to-destination 10.3.15.1
    post-down brctl delbr qvdnet0
    address 10.3.15.1
    netmask 255.255.255.0

In the example above, you will need to change the IP address 192.168.0.2 to the IP address of the network interface to which you want your clients to connect. The example uses the range 10.3.15.0/24 for the virtual network used by QVD. This range must be unique within your infrastructure and used only by QVD, so that the services started in QVD do not interfere with other systems on the network.

There are other, cleaner ways to configure the network, but they sometimes have problems with specific types of network interface, such as Wi-Fi. The approach above should work on most systems.

Once the network configuration has been written to the file, bring up the network bridge interface:

# ifup qvdnet0
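Because the stanza above hardcodes 192.168.0.2, it can be convenient to generate it with your own addresses and review the result before appending it to /etc/network/interfaces. The bash sketch below prints the same lines; the function name is hypothetical and its defaults (eth0, qvdnet0, port 8443, netmask 255.255.255.0) are simply the values used in the example.

```shell
# Sketch: print the qvdnet0 stanza from the example with your own addresses.
# Hypothetical helper; defaults are taken from the example above.
qvd_bridge_stanza() {  # qvd_bridge_stanza PUBLIC_IP BRIDGE_IP [NETMASK] [EXT_IF] [BRIDGE]
  local public_ip=$1 bridge_ip=$2 netmask=${3:-255.255.255.0}
  local ext_if=${4:-eth0} bridge=${5:-qvdnet0}
  cat <<EOF
auto ${bridge}
iface ${bridge} inet static
    pre-up brctl addbr ${bridge}
    pre-up iptables -t nat -A POSTROUTING -o ${ext_if} -j SNAT --to-source ${public_ip}
    pre-up iptables -t nat -A PREROUTING -d ${public_ip} -p tcp --dport 8443 -j DNAT --to-destination ${bridge_ip}
    post-down brctl delbr ${bridge}
    address ${bridge_ip}
    netmask ${netmask}
EOF
}
```

For example, after checking the output: qvd_bridge_stanza 192.168.0.2 10.3.15.1 >> /etc/network/interfaces, then ifup qvdnet0.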

3.3.4. Configure QVD for its network

For QVD to correctly manage the configuration of the virtual machine and the subsequent routing, you will need to change some of the configuration settings inside QVD-DB. It is recommended you use the QVD CLI Administration Utility to do this. You can also use the WAT if you have already configured it.

These settings provide a dedicated network environment for the virtual machines. You must use IP addresses and network ranges that do not conflict with your existing network infrastructure. The following example uses the range 10.3.15.0/24 for the virtual network used by QVD.

# qa4 config set tenant_id=-1,key=vm.network.ip.start,value=10.3.15.50
# qa4 config set tenant_id=-1,key=vm.network.netmask,value=24
# qa4 config set tenant_id=-1,key=vm.network.gateway,value=10.3.15.1
# qa4 config set tenant_id=-1,key=vm.network.dns_server,value=10.3.15.254
# qa4 config set tenant_id=-1,key=vm.network.bridge,value=qvdnet0

If AppArmor is running on your host machine, you may find that it does not allow the virtual machines to access the Internet. An AppArmor profile for QVD is available in the packages. Alternatively, you can disable AppArmor with /etc/init.d/apparmor teardown, which stops AppArmor and lets QVD run normally. If this is unacceptable in your production environment, use the profile mentioned above and ask the QVD support team for help if necessary.

These settings are described in more detail in the section of the QVD Administration manual titled Virtual Machine Options in the chapter Basic configuration of QVD.
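A typo in these values is easy to make, and presumably the VM start address has to lie in the same network as the gateway for the virtual machines to be reachable. The bash sketch below checks that vm.network.ip.start falls inside gateway/netmask and differs from the gateway, and only then issues the same five qa4 config set calls shown above. The function names are hypothetical, and vm.network.netmask is treated as a prefix length, as in the example (value=24).

```shell
# Sketch: sanity-check the VM network values, then apply them with qa4.
# Function names are hypothetical.

# Convert a dotted-quad IPv4 address to a 32-bit integer (10# avoids octal parsing).
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# Succeed if IP1 and IP2 are in the same network for the given prefix length.
same_subnet() {  # same_subnet IP1 IP2 PREFIX
  local mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$1") & mask) == ($(ip_to_int "$2") & mask) ))
}

apply_vm_network() {  # apply_vm_network IP_START PREFIX GATEWAY DNS [BRIDGE]
  local start=$1 prefix=$2 gateway=$3 dns=$4 bridge=${5:-qvdnet0}
  if ! same_subnet "$start" "$gateway" "$prefix" || [ "$start" = "$gateway" ]; then
    echo "ip.start $start is not a usable address in $gateway/$prefix" >&2
    return 1
  fi
  qa4 config set "tenant_id=-1,key=vm.network.ip.start,value=$start" &&
  qa4 config set "tenant_id=-1,key=vm.network.netmask,value=$prefix" &&
  qa4 config set "tenant_id=-1,key=vm.network.gateway,value=$gateway" &&
  qa4 config set "tenant_id=-1,key=vm.network.dns_server,value=$dns" &&
  qa4 config set "tenant_id=-1,key=vm.network.bridge,value=$bridge"
}
```

With the example values: apply_vm_network 10.3.15.50 24 10.3.15.1 10.3.15.254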


3.4. Configuration of SSL

The QVD server needs an X509 certificate and a private key to protect network connections. For a production installation, you must use a certificate issued by a trusted certification authority, such as Verisign or Thawte. For testing, you can use a self-signed certificate; instructions for creating self-signed certificates are provided in the QVD Installation Guide. If you have a certificate signed by a third party, you can register it with QVD using the QVD CLI administration utility:

# qa config ssl key=/path/to/private/key.pem,cert=/path/to/server/certificate.pem
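Before registering a certificate, it can be worth checking that the private key actually belongs to it, since a mismatched pair is a common cause of TLS failures. The bash sketch below compares the RSA moduli of the key and the certificate with the OpenSSL command-line tool; it assumes RSA keys and that openssl is installed. The make_selfsigned_cert helper is for testing only and is not a substitute for the procedure in the QVD Installation Guide. Both function names are hypothetical.

```shell
# Sketch (hypothetical helpers, OpenSSL CLI and RSA keys assumed).

# Succeed only if the RSA private key and the certificate share the same modulus.
ssl_key_matches_cert() {  # ssl_key_matches_cert KEY.pem CERT.pem
  local key=$1 cert=$2 key_mod cert_mod
  key_mod=$(openssl rsa -noout -modulus -in "$key" 2>/dev/null) || return 1
  cert_mod=$(openssl x509 -noout -modulus -in "$cert" 2>/dev/null) || return 1
  [ -n "$key_mod" ] && [ "$key_mod" = "$cert_mod" ]
}

# Testing only: create a self-signed key/certificate pair in DIR.
make_selfsigned_cert() {  # make_selfsigned_cert DIR [COMMON_NAME]
  local dir=$1 cn=${2:-$(hostname)}
  openssl req -x509 -newkey rsa:2048 -nodes -days 365 -subj "/CN=${cn}" \
    -keyout "$dir/key.pem" -out "$dir/cert.pem" 2>/dev/null
}
```

For example: ssl_key_matches_cert /path/to/private/key.pem /path/to/server/certificate.pem && qa config ssl key=/path/to/private/key.pem,cert=/path/to/server/certificate.pem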

4. The API of QVD

In version 4 of QVD, a REST interface has been created that lets you control the solution. It was created to make it easier to integrate the product with third parties, and internally both the WAT and the command-line tools have been redesigned to use it.

The API is therefore now the standard way of controlling the solution, and it has its own documentation. That documentation is not needed to administer the platform and is only of interest to integrators and programmers.

The commands to start and stop the API are:

# /etc/init.d/qvd-api start
# /etc/init.d/qvd-api stop

The log is in:

/var/log/qvd/qvd-api.log

5. Web administration tool of QVD

In version 4 of QVD, the web administration tool (WAT) has grown considerably in capabilities and functionality, so much so that from this version on, the WAT has its own administration manual. Please read that manual for information about the WAT.

Note that the WAT manual complements this one, and you will probably need to read this document before you can fully understand the WAT manual.

As noted above, the WAT depends on the API and cannot work without it.

6. CLI Administration utility of QVD

From version 4.0, QVD has a new administration tool, qa4. Its syntax differs from that of the previous tool, and it is documented here. The previous tool has been kept for backward compatibility and is documented in the annex Legacy CLI Administration utility of QVD.

The QVD command line administration utility is a Perl script that interacts with the QVD-DB via the API (from version 4 of QVD) to perform a wide range of operations inside the QVD infrastructure. It can be used as an alternative to the QVD web administration tool (QVD-WAT) and can be installed on any system with access to the API.

6.1. Installation and configuration of the QVD CLI administration utility

On each system where you are going to install the QVD CLI administration utility, you will have to add the QVD repository to your apt sources.

Firstly, add the public key of the QVD packages to your trusted keys (as root):

# wget -qO - https://www.theqvd.com/packages/key/public.key | sudo apt-key add -

Now, add the repository:

/etc/apt/sources.list.d/qvd.list
# apt-get update

For commercial packages:

# echo "deb http://$USER:$PASSWORD@theqvd.com/commercial-packages/ubuntu/xenial QVD-4.1.0 main" > \
/etc/apt/sources.list.d/qvd.list
# apt-get update

$USER and $PASSWORD are the credentials received when the subscription is purchased.

To install the QVD CLI Administration utility, execute the following command:

# apt-get install perl-qvd-admin4

For SLES:

Firstly, add the public key of the QVD packages to your trusted keys (as root):

# rpm --import https://www.theqvd.com/packages/key/public.key

Now, add the repository:

# zypper ar http://theqvd.com/packages/sles/12SP1/QVD-4.1.0 QVD
# zypper ref

For commercial packages:

# zypper ar http://theqvd.com/commercial-packages/sles/12SP1/QVD-4.1.0 QVD
User Name: $USER
Password: $PASSWORD
# zypper ref

$USER and $PASSWORD are the credentials received when the subscription is purchased.

To install the QVD CLI Administration utility, execute the following command:

# zypper install perl-QVD-Admin4

For CentOS:

Firstly, add the public key of the QVD packages to your trusted keys (as root):

# rpm --import https://www.theqvd.com/packages/key/public.key

Now, add the repository:

# yum-config-manager --add-repo http://theqvd.com/packages/centos/7.2/QVD-4.1.0/

For commercial packages:

# echo "[QVD-4.1.0]" > /etc/yum.repos.d/QVD-4.1.0.repo
# echo "name=QVD-4.1.0" >> /etc/yum.repos.d/QVD-4.1.0.repo
# echo "baseurl=http://$USER:$PASSWORD@theqvd.com/commercial-packages/centos/7.2/QVD-4.1.0/" | sed 's/@\(.*@\)/%40\1/' >> /etc/yum.repos.d/QVD-4.1.0.repo
# echo "enabled=1" >> /etc/yum.repos.d/QVD-4.1.0.repo

$USER and $PASSWORD are the credentials received when the subscription is purchased.

To install the QVD CLI Administration Utility, run the following command:

# yum install perl-QVD-Admin4
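The three install commands above differ only by distribution, so provisioning scripts often pick one automatically. The bash sketch below reads the ID field of /etc/os-release and prints the matching command from this section; it assumes the usual ID values ubuntu, sles and centos, and the function name is hypothetical. It only prints the command, so you can review it before running it as root.

```shell
# Sketch: print the qa4 install command for the distributions covered above.
# Hypothetical helper; relies on the ID field of os-release.
qvd_admin_install_cmd() {  # qvd_admin_install_cmd [OS_RELEASE_FILE]
  local os_release=${1:-/etc/os-release} id
  # Source the file in a subshell so its variables do not leak into this shell.
  id=$( . "$os_release" && printf '%s' "${ID:-}" )
  case $id in
    ubuntu) echo "apt-get install perl-qvd-admin4" ;;
    sles)   echo "zypper install perl-QVD-Admin4" ;;
    centos) echo "yum install perl-QVD-Admin4" ;;
    *)      echo "distribution '$id' is not covered by this guide" >&2; return 1 ;;
  esac
}
```

The repository still has to be added first, as described above for each distribution.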

The QVD CLI administration utility requires access to the QVD API. Make sure that the file qa.conf is configured correctly, as described in Basic Configuration of QVD.

6.2. List of QVD CLI Commands

The QVD CLI administration utility provides a wide range of administration functions that can be used to control components and elements that are involved in the QVD environment.

You can obtain a complete list of these functions by specifying the usage parameter:

# qa4 usage

==============================================================================

AVAILABLE COMMANDS

==============================================================================

For a specific explanation of the following commands run:

usage <COMMAND>

i.e. usage login

== CLI GENERAL MANAGEMENT COMMANDS

usage (retrieves instructions about the usage of the app)

login (Intended to log in as a QVD administrator)

logout (Intended to log out)

password (Intended to change current QVD administrator password)

block (Intended to change current QVD administrator pagination block)

version (Retrieves information about the QVD version the app is connected to)

log (retrieves log entries about the QVD server activity)

== QVD OBJECTS COMMANDS

vm (Intended to QVD virtual machines management)

user (Intended to QVD users management)

host (Intended to QVD hosts management)

osf (Intended to QVD OSFs management)

di (Intended to QVD disk images management)

tenant (Intended to QVD tenants management)

role (Intended to QVD roles management)

acl (Intended to QVD acls management)

admin (Intended to QVD administrators management)

config (Intended to QVD configuration management)

Any of the commands presented above can be passed to usage to obtain a more detailed description of its syntax.

For example:

# qa4 usage vm

======================================================================================================

VM COMMAND USAGE

======================================================================================================

== CREATING A NEW VM

vm new <ARGUMENTS>

For example:

vm new name=myvm, user=myuser, osf=myosf (Creates a VM with user 'myuser', osf 'myosf' and name 'myvm')

== GETTING VMs

vm get

vm <FILTERS> get

vm <FILTERS> get <FIELDS TO RETRIEVE>

For example:

vm get (retrieves default fields of all VMs)

vm name=myvm get (retrieves default fields of all VMs with name 'myvm')

vm name=myvm get name, id, ip (retrieves 'name', 'id' and 'ip' of VMs with name 'myvm')

Ordering:

vm ... order <ORDER CRITERIA>

vm ... order <ORDER DIRECTION> <ORDER CRITERIA>

For example:

vm get order name (Ordering by 'name' in default ascendant order)

vm get order asc name, id (Ordering by 'name' and 'id' in ascendant order)

vm get order desc name, id (Ordering by 'name' and 'id' in descendent order)

== UPDATING VMs

vm set <ARGUMENTS>

vm <FILTERS> set <ARGUMENTS>

For example:

vm set di_tag=default (Sets new value for di_tag in all VMs)

vm name=myvm set name=yourvm, di_tag=default (Sets new values for name and di_tag in VM with name myvm)

Adding custom properties:

vm <FILTERS> set property key=value

vm <FILTERS> set property key=value, key=value, ...

For example:

vm set property mykey=myvalue (Sets property mykey in all VMs)

vm name=myvm set property mykey=myvalue, yourkey=yourvalue (Sets properties mykey and yourkey in VM with name myvm)

Deleting custom properties:

vm <FILTERS> del property key

vm <FILTERS> del property key, key, ...

For example:

vm del property mykey (Deletes property mykey in all VMs)

vm name=myvm del property mykey, yourkey (Deletes properties mykey and yourkey in VM with name myvm)

Blocking/Unblocking VMs

vm <FILTERS> block

vm <FILTERS> unblock

For example:

vm block (Blocks all VMs)

vm name=myvm block (Blocks VM with name myvm)

== REMOVING VMs

vm del

vm <FILTERS> del

For example:

vm del (Removes all VMs)

vm name=myvm del (Removes VM with name myvm)

== EXECUTING VMs

vm <FILTERS> start

vm <FILTERS> stop

vm <FILTERS> disconnect

For example:

vm start (Starts all VMs)

vm name=myvm stop (Stop VM with name myvm)

======================================================================================================

DEFAULT FILTERS

======================================================================================================

The filter key 'name', and the operator '=' are considered as defaults. So the following is a right

syntax:

<QVD OBJECT COMMAND> <QVD OBJECT NAME> <ACTION COMMAND>

For example:

vm myVM get (Equal to vm name=myVM get)

vm myVM set name=yourVM (Equal to vm name=myVM set name=yourVM)

======================================================================================================

COMPLEX FILTERS

======================================================================================================

A filter is a key/value pair. Key and value can be related by means of different kinds of

IDENTITY OPERATORS (=, >, <, etc.). Different operators allow different kinds of values

(numeric, alphanumeric, arrays, ranges...). Moreover, two or more filters can be joined by means

of LOGICAL OPERATORS (coordination, disjunction and negation operators).

DIFFERENT IDENTITY OPERATORS:

Supported operators:

= (equal)

!= (not equal)

< (less than)

> (greater that)

<= (less or equal than)

>= (greater or equal than)

~ (matches with a common expression: the SQL LIKE operator)

For example:

key1 = 1,

key1 < 3,

key1 > 3,

key1 <= 3,

key1 >= 3

key1 = [1,2,3] (key1 must be in (1, 2, 3))

key1 = [1:3] (key1 must be between 1 and 3)

key1 = This_is_a_chain

key1 = 'This is a chain' (A value with blanks must be quoted)

key1 = "This is a chain" (A value with blanks must be quoted)

key1 ~ %s_is_a_ch% (key1 must match the SQL commodins expression %s_is_a_ch%)

key1 ~ '%s is a ch%' (key1 must match the SQL commodins expression %s_is_a_ch%)

key1 ~ "%s is a ch%" (key1 must match the SQL commodins expression %s_is_a_ch%)

LOGICAL OPERATORS

Supported operators

, (the AND operator)

; (the OR operator)

! (the NOT operator)

(These operators have left precedence. In order to override this behaviour you must

group filters with '(' and ')')

For example:

key1=value, key2=value, key3=value (key1 AND key2 AND key3)

(key1=value; key2=value), key3=value ((key1 OR key2) AND key3))

!key1=value (This expression means: NOT key1)

!key1=value, key2=value, key3=value (NOT ( key1 AND key2 AND key3))

(! key1=value), key2=value, key3=value ((NOT key1) AND key2 AND key3))

======================================================================================================

6.3. Use of filters to control operations

Many of the operations available through the QVD CLI administration utility also accept filters that limit an action to a particular element or entity. Filters essentially match values in the columns of the table, just as the WHERE clause of a standard SQL query does. Filters accept comparisons, wildcards and logical operators.

If, for example, we would like to obtain machines with an id greater than 12000 belonging to the tenant qvd_test:

# qa4 vm "id>12000,tenant=qvd_test" get
.-------+----------+------+---------+-----------+------+---------------------------------------+-------------+-----------+-----------+---------+--------------.
| id    | tenant   | name | blocked | user      | host | di                                    | ip          | ip_in_use | di_in_use | state   | user_state   |
+-------+----------+------+---------+-----------+------+---------------------------------------+-------------+-----------+-----------+---------+--------------+
| 12604 | qvd_test | Java |       0 | test_user |      | 14000-Desktop_Development-Java.tar.gz | 10.10.53.79 |           |           | stopped | disconnected |
'-------+----------+------+---------+-----------+------+---------------------------------------+-------------+-----------+-----------+---------+--------------'
Total: 1

When the CLI administration utility is used to check the state of VMs, filters are commonly used to show the virtual machines that are in a specific state, that belong to a particular user, or that have a given user_state.

The following example will show you how to chain together a series of filters to see all the virtual machines that belong to users with usernames that start with qvd, where also the virtual machine is stopped and the user is disconnected:

# qa4 vm "user~qvd%,state=stopped,user_state=disconnected" get
.-------+---------+------------------+---------+----------+------+-------------------------------+--------------+-----------+-----------+---------+--------------.
| id    | tenant  | name             | blocked | user     | host | di                            | ip           | ip_in_use | di_in_use | state   | user_state   |
+-------+---------+------------------+---------+----------+------+-------------------------------+--------------+-----------+-----------+---------+--------------+
| 10800 | qvd_43  | Desktop_Standard |       0 | qvd-test |      | 10000-Desktop_Standard.tar.gz | 10.13.25.100 |           |           | stopped | disconnected |
| 10900 | qvd_75  | Desktop_Standard |       0 | qvd-test |      | 10000-Desktop_Standard.tar.gz | 10.13.25.180 |           |           | stopped | disconnected |
| 10500 | qvd_264 | Desktop_Standard |       0 | qvd-test |      | 10000-Desktop_Standard.tar.gz | 10.13.23.120 |           |           | stopped | disconnected |
| 10100 | qvd_115 | Desktop_Standard |       0 | qvd-test |      | 10000-Desktop_Standard.tar.gz | 10.13.24.220 |           |           | stopped | disconnected |
| 10200 | qvd_155 | Desktop_Standard |       0 | qvd-test |      | 10000-Desktop_Standard.tar.gz | 10.13.24.160 |           |           | stopped | disconnected |
| 10102 | qvd_77  | Desktop_Standard |       0 | qvd-test |      | 10000-Desktop_Standard.tar.gz | 10.13.25.30  |           |           | stopped | disconnected |
'-------+---------+------------------+---------+----------+------+-------------------------------+--------------+-----------+-----------+---------+--------------'
Total: 6
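Several filter operators (<, >, ;, !, parentheses) are also shell metacharacters, which is why the filters in these examples are quoted. The small bash demonstration below uses a hypothetical debugging helper, show_args (not part of QVD), that prints each argument the shell passes to a command, so you can see what qa4 would actually receive.

```shell
# Debugging aid (hypothetical, not part of QVD): print each argument the shell
# passes to a command, one per line, wrapped in <...>.
show_args() { printf '<%s>\n' "$@"; }

# Quoted: the whole filter reaches the command as a single argument.
show_args vm 'id>12000,tenant=qvd_test' get

# Unquoted, '>' would be parsed as an output redirection instead:
#   show_args vm id>12000,tenant=qvd_test get
# passes only "vm", "id" and "get", and writes the output to a file
# named "12000,tenant=qvd_test". Likewise ';' ends the command early and
# '(' or ')' cause a syntax error.
```

Single quotes are the safer choice when a filter contains !, because interactive bash performs history expansion inside double quotes. Following the grammar above, a grouped filter would be written as qa4 vm '(user~qvd%;user~test%),state=stopped' get.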

6.4. Basic administrative operations

In this section we will examine some of the most common administrative tasks for which the QVD CLI administration utility is used.

6.4.1. Change the QVD configuration settings

QVD has a wide range of very specific configuration settings that control various components inside the infrastructure. We discuss some of them here.

To change a QVD configuration parameter via the QVD CLI administration utility, do the following:

# qa4 config set tenant_id=-1,key=myproperty,value="this is a value"

It is also possible to obtain all the configuration settings of the database and list them:

# qa4 config get

Lastly, it is possible to return a configuration to its original value:

# qa4 config set tenant_id=-1,key=qvd.prop default

6.4.2. Adding a QVD server node

It is common to use the QVD CLI administration utility to add new QVD server nodes to the QVD database. This can be done quickly from the command line with the following instruction:

# qa4 host new name=myhost, address=10.3.15.1

Removing a QVD server node is equally simple:

# qa4 host name=myhost del
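Since the name registered here must match the nodename= entry in the node's configuration file (see Basic configuration above), one way to keep the two from drifting apart is to read the name from node.conf when registering. The bash sketch below is a hypothetical helper wrapping the qa4 host new command shown above; the address argument is whatever address you would otherwise pass (10.3.15.1 in the example).

```shell
# Sketch: register this node under the nodename= value from node.conf.
# Hypothetical helper wrapping "qa4 host new".
register_this_node() {  # register_this_node ADDRESS [NODE_CONF]
  local address=$1 conf=${2:-/etc/qvd/node.conf} name
  # Use the last nodename= line, mirroring how an appended entry would win.
  name=$(sed -n 's/^nodename=//p' "$conf" 2>/dev/null | tail -n 1)
  if [ -z "$name" ]; then
    echo "no nodename= line found in $conf" >&2
    return 1
  fi
  qa4 host new name="$name", address="$address"
}
```

Run it on the node itself, for example: register_this_node 10.3.15.1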

6.4.3. Add an OSF

You can easily add an OSF to QVD using the QVD CLI administration utility:

# qa4 osf new name=myOSF

There is only one compulsory value to add an OSF, which is name. If the other parameters are left unspecified, their default values are used instead. These are:

-

Memory = 256

-

Use_overlay = y

-

User_storage_size = undef (no limit on user storage)

You can obtain a list of currently available OSF doing the following:

# qa4 osf get

6.4.4. Adding a DI

Using the QVD CLI administration utility, you can attach a disk image (DI) to any existing OSF in the system. This process can take some time since, in addition to updating the database, the actual disk image file is copied to the storage/images directory in the shared storage.

By attaching a DI to a particular OSF, the actual disk is kept separate from the image that is served to end users. This means that you can make changes to the disk image and simply update the OSF, so that the next time the user connects, the image is updated automatically without the user experiencing any disruption to the service.

# qa4 di new disk_image=test-image.tar.gz, osf=retest

Both disk_image and osf are compulsory to add a DI. When the DI is added, the image specified in the path is copied to the read-only storage area configured to store active DIs (usually /var/lib/qvd/storage/images).

You can obtain a list of currently available images by doing the following:

# qa4 di get

6.4.5. Tagging DIs

DIs can be tagged freely with arbitrary strings. To tag a DI, for example as default, use the di tag command:

# qa4 di <filter> tag <tag1>,<tag2>,...
# qa4 di disk_image=mydi tag default, head, mytag

You can tag DIs with any string, not only default or head. This lets you use meaningful tag names, for example "software_bug_fixed", in the DI Tag field of the virtual machines.

Tags are useful because they let you attach a new version of a disk image to an OSF without affecting anybody who is using the current or default image of that OSF. You can implement a change and move specific virtual machines to the new image while you are testing it. If the image fails for any reason or does not meet your requirements, it is easy to go back to the default image and let the users continue working while corrections are made.

6.4.6. Choosing the DI tag that the virtual machines will use

To tell a virtual machine to use a DI with a specific tag, edit the VM and change its di_tag field. For example, if we have just finished correcting a software error in a DI and have tagged it "software_bug_fixed", we can use this DI in the VM myvm with the following command:

# qa4 vm name=myvm set di_tag=software_bug_fixed

The next time the VM myvm is started, it will use the DI with this tag.
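The tag workflow described above (tag the new image, move one test VM to it, fall back to default if it misbehaves) can be wrapped in two small functions. The bash sketch below is hypothetical and uses only the qa4 di ... tag and qa4 vm ... set di_tag= commands from this section. It takes the DI filter as an argument because the stored image name may differ from the file you uploaded (the VM listings above show names with a numeric prefix), so check qa4 di get first.

```shell
# Sketch: canary rollout of a tagged DI. Hypothetical helpers wrapping qa4.

# Tag an already-imported DI and point a single test VM at that tag.
di_canary() {  # di_canary DI_FILTER TAG VM_NAME
  local di_filter=$1 tag=$2 vm=$3
  qa4 di "$di_filter" tag "$tag" || return 1
  qa4 vm name="$vm" set di_tag="$tag"   # takes effect at the VM's next start
}

# Roll the test VM back to the image tagged "default".
di_rollback() {  # di_rollback VM_NAME
  qa4 vm name="$1" set di_tag=default
}
```

For example: di_canary disk_image=mydi software_bug_fixed myvm, and later di_rollback myvm if the new image has problems.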

6.4.7. Adding and eliminating users

It is common to use the QVD CLI administration utility to quickly add and eliminate users.

# qa4 user new login=peter,password=s3cr3t
# qa4 user login=guest3 del

You can also list all QVD users with the get action:

# qa4 user get

Be aware that, as explained in the Installation guide, it is not advisable to create a user whose username already exists in any disk image.
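When many users have to be created at once, the qa4 user new command can be driven from a file. The bash sketch below reads one "login password" pair per line, skipping blank lines and lines starting with #. The function name and file format are assumptions; logins and passwords must not contain spaces or commas, since the comma separates qa4 arguments. Passwords passed on the command line may be visible to other local users in the process list, so run this on a trusted host and keep the input file private (for example chmod 600).

```shell
# Sketch: bulk user creation from a "login password" file. Hypothetical helper.
bulk_add_users() {  # bulk_add_users FILE
  local login password
  # Read from fd 3 so qa4 keeps the terminal on stdin (e.g. for prompts);
  # the "|| [ -n ... ]" also processes a last line without a trailing newline.
  while read -r login password <&3 || [ -n "$login" ]; do
    case $login in ''|'#'*) continue ;; esac
    qa4 user new "login=${login},password=${password}" || return 1
  done 3< "$1"
}
```

Remember the warning above: do not create users whose usernames already exist in any disk image.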

6.4.8. Resetting a user password

You can change a user’s password via the QVD CLI Administration utility:

# qa4 user name=guest set passwd=newpassword

In the example above, the password of the user whose login is guest is changed to newpassword.

6.4.9. Adding and eliminating virtual machines

Adding and eliminating virtual machines via the QVD CLI administration utility is easy. You can specify the user and the OSF by ID or by name:

# qa4 vm new name=GuestVM,user_id=1,osf_id=1
# qa4 vm new name=GuestVM,user=peter,osf=myOSF

You can easily eliminate a virtual machine using the following command:

# qa4 vm "name=GuestVM" del

6.4.10. Starting and stopping virtual machines

The QVD CLI administration utility can be used to start and stop virtual machines. Unless a filter is specified, the action starts or stops all the virtual machines, so this command is usually run with a filter that identifies the specific virtual machines you wish to start or stop. Examples:

# qa4 vm "user~guest%" stop
# qa4 vm id=1 start

The balancing policy determines the node where the VM will start.
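Because an unfiltered action such as qa4 vm stop or qa4 vm del applies to every virtual machine, scripts that build these commands can guard against an accidentally empty filter. The bash sketch below is a hypothetical wrapper around qa4 vm <FILTERS> <ACTION> that refuses to run without a filter and only accepts the actions listed in the qa4 usage output above.

```shell
# Sketch: refuse unfiltered VM actions. Hypothetical wrapper around qa4.
qa4_vm_action() {  # qa4_vm_action FILTER ACTION
  local filter=$1 action=$2
  if [ -z "$filter" ]; then
    echo "refusing 'vm $action' without a filter (it would affect all VMs)" >&2
    return 1
  fi
  case $action in
    start|stop|disconnect|block|unblock|del) ;;
    *) echo "unknown action: $action" >&2; return 1 ;;
  esac
  qa4 vm "$filter" "$action"
}
```

For example, qa4_vm_action "$FILTER" stop fails safely if $FILTER is unset, instead of stopping every virtual machine.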

6.4.11. Blocking and unblocking virtual machines

Virtual machines can be marked as "blocked". While a virtual machine is in this state, the QVD Client application cannot connect to it. An administrator can block a virtual machine to carry out an administration task, and a virtual machine can also end up blocked when the HKD is unable to manage it correctly. The following commands mark a virtual machine as blocked and unblock a virtual machine that is in this state:

# qa4 vm "id=2" block
# qa4 vm "name=GuestVM" unblock

See the section on blocking and unblocking virtual machines in the WAT manual for more information about this state.
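A common use of blocking is to keep users out while an administrator works on a VM. The minimal sketch below, with hypothetical function names, blocks the VMs matched by a filter, runs a maintenance command of your choice and unblocks them afterwards even when that command fails, returning the command's exit status. QA4="echo qa4" gives a dry run.

```shell
#!/bin/sh
# Hypothetical helper (not part of QVD): run qa4, or $QA4 for a dry run.
qa4_run() {
    ${QA4:-qa4} "$@"
}

# with_vm_blocked FILTER COMMAND [ARGS...]
# Block the VMs matched by FILTER, run COMMAND, then unblock them even if
# COMMAND failed. Returns COMMAND's exit status.
with_vm_blocked() {
    filter=$1
    shift
    qa4_run vm "$filter" block || return 1
    status=0
    "$@" || status=$?
    qa4_run vm "$filter" unblock || return 1
    return "$status"
}
```

For example, with_vm_blocked "name=GuestVM" ./my-maintenance.sh, where ./my-maintenance.sh stands for your own script.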

6.4.12. Troubleshooting virtual machines

In this version of the administration tool, the old methods of accessing virtual machines by console or VNC have been disabled. They are no longer needed, given the new tools that the WAT provides.

6.4.13. Configuration of customized properties for a virtual machine

QVD lets you set custom properties on a virtual machine and retrieve them with the QVD CLI administration utility. This is useful if you need to write your own behaviours or want to take advantage of the VMA hooks (see the relevant section).

Custom properties are often used when writing your own L7R plugins, such as authentication or load balancing modules.

Custom properties are supported for the following objects: host, user and vm.

To add a custom property, use the set property command:

# qa4 user name=myuser set property mykey=myvalue, yourkey=yourvalue

To list all the custom properties that have been set on an object type (users, in this example), use:

# qa4 user get property

Finally, you can delete a custom property with property del:

# qa4 user name=juan property del beverage

7. QVD GUI Client

The QVD client is available for Microsoft Windows, Linux and Mac OS X. It is available in English and Spanish and, by default, uses the language of the system's regional settings.

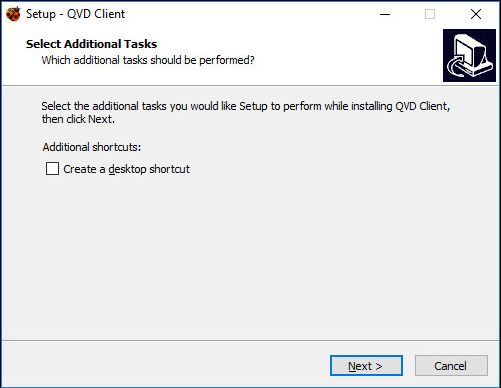

7.1. Installation of the Windows client

You can download the QVD client software installer from:

Once the download has finished, run the installer as a normal executable file and follow the wizard through the installation process.

Once the installation has finished, you can run the client from the shortcut on the Windows desktop (if you chose to add it) or from the QVD entry in the applications menu. This opens the client, ready to connect.

7.2. Installation of the Mac OS X client

You can download the QVD client package from:

The package installs the QVD client in the Applications directory. To launch the client, double-click the ladybird icon, or use the native Spotlight search (press Command + Space) and type qvd.

7.3. Installation of the Linux client

Installing the QVD client on an Ubuntu Linux platform is straightforward.

First, add the public key of the QVD packages to your trusted keys (as root):

# wget -qO - https://www.theqvd.com/packages/key/public.key | sudo apt-key add -

Now, add a deb line for the QVD repository to /etc/apt/sources.list.d/qvd.list and refresh the package index:

# apt-get update

For commercial packages:

# echo "deb http://$USER:$PASSWORD@theqvd.com/commercial-packages/ubuntu/xenial QVD-4.1.0 main" > \
/etc/apt/sources.list.d/qvd.list
# apt-get update

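$USER and $PASSWORD are the credentials received when the subscription is purchased. Replace them with your own values: inside the double quotes the shell expands $USER, which is normally set to your login name.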

Now you can install the client with the following command.

# apt-get install perl-qvd-client

For SLES:

Firstly, add the public key of the QVD packages to your trusted keys (as root):

# rpm --import https://www.theqvd.com/packages/key/public.key

Now, add the repository:

# zypper ar http://theqvd.com/packages/sles/12SP1/QVD-4.1.0 QVD
# zypper ref

For commercial packages:

# zypper ar http://theqvd.com/commercial-packages/sles/12SP1/QVD-4.1.0 QVD
User Name: $USER
Password: $PASSWORD
# zypper ref

$USER and $PASSWORD are the credentials received when the subscription is purchased.

You can now install the client with the zypper command.

# zypper install perl-QVD-Client

For CentOS:

Firstly, add the public key of the QVD packages to your trusted keys (as root):

# rpm --import https://www.theqvd.com/packages/key/public.key

Now, add the repository:

# yum-config-manager --add-repo http://theqvd.com/packages/centos/7.2/QVD-4.1.0/

For commercial packages:

# echo "[QVD-4.1.0]" > /etc/yum.repos.d/QVD-4.1.0.repo
# echo "name=QVD-4.1.0" >> /etc/yum.repos.d/QVD-4.1.0.repo
# echo "baseurl=http://$USER:$PASSWORD@theqvd.com/commercial-packages/centos/7.2/QVD-4.1.0/" | sed 's/@\(.*@\)/%40\1/' >> /etc/yum.repos.d/QVD-4.1.0.repo
# echo "enabled=1" >> /etc/yum.repos.d/QVD-4.1.0.repo

The sed expression encodes the first @ as %40 whenever the URL contains more than one @, which happens when the subscription user name is an e-mail address.

$USER and $PASSWORD are the credentials received when the subscription is purchased.
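As an alternative to the echo commands above, the repository file can be written in one step with a here-document. This minimal sketch is not part of QVD: QVD_USER, QVD_PASSWORD and REPO_FILE are hypothetical variable names. It deliberately avoids $USER, which the shell normally sets to your login name, so the echo commands above pick up that name unless you replace $USER yourself. It encodes every @ in the user name as %40; other special characters in the user name or password are not encoded.

```shell
#!/bin/sh
# Hypothetical helper (not part of QVD): write the commercial CentOS
# repository file with the subscription credentials.
# Inputs: QVD_USER, QVD_PASSWORD and, optionally, REPO_FILE.
write_qvd_repo() {
    repo_file=${REPO_FILE:-/etc/yum.repos.d/QVD-4.1.0.repo}
    if [ -z "${QVD_USER:-}" ] || [ -z "${QVD_PASSWORD:-}" ]; then
        echo "write_qvd_repo: set QVD_USER and QVD_PASSWORD" >&2
        return 1
    fi
    # Encode every '@' in the user name (e.g. an e-mail address) as %40 so
    # it does not add an extra '@' to the URL.
    user_enc=$(printf '%s' "$QVD_USER" | sed 's/@/%40/g')
    cat > "$repo_file" <<EOF
[QVD-4.1.0]
name=QVD-4.1.0
baseurl=http://$user_enc:$QVD_PASSWORD@theqvd.com/commercial-packages/centos/7.2/QVD-4.1.0/
enabled=1
EOF
}
```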

You can now install the client with the yum command.

# yum install perl-QVD-Client
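On machines where the distribution is not known in advance, the install commands above can be chosen from the ID field of /etc/os-release. This is a minimal sketch with a hypothetical function name; it assumes the usual ID values ubuntu, sles and centos, and it only prints the command rather than running it.

```shell
#!/bin/sh
# Hypothetical helper (not part of QVD): print the QVD client install
# command for the distributions covered in this guide, using the ID field
# of an os-release file (default /etc/os-release).
qvd_client_install_cmd() {
    os_release=${1:-/etc/os-release}
    # Take the value of the ID= line and drop optional double quotes.
    id=$(sed -n 's/^ID=//p' "$os_release" | tr -d '"')
    case $id in
        ubuntu) echo "apt-get install perl-qvd-client" ;;
        sles)   echo "zypper install perl-QVD-Client" ;;
        centos) echo "yum install perl-QVD-Client" ;;
        *)      echo "unsupported distribution: ${id:-unknown}" >&2; return 1 ;;
    esac
}
```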

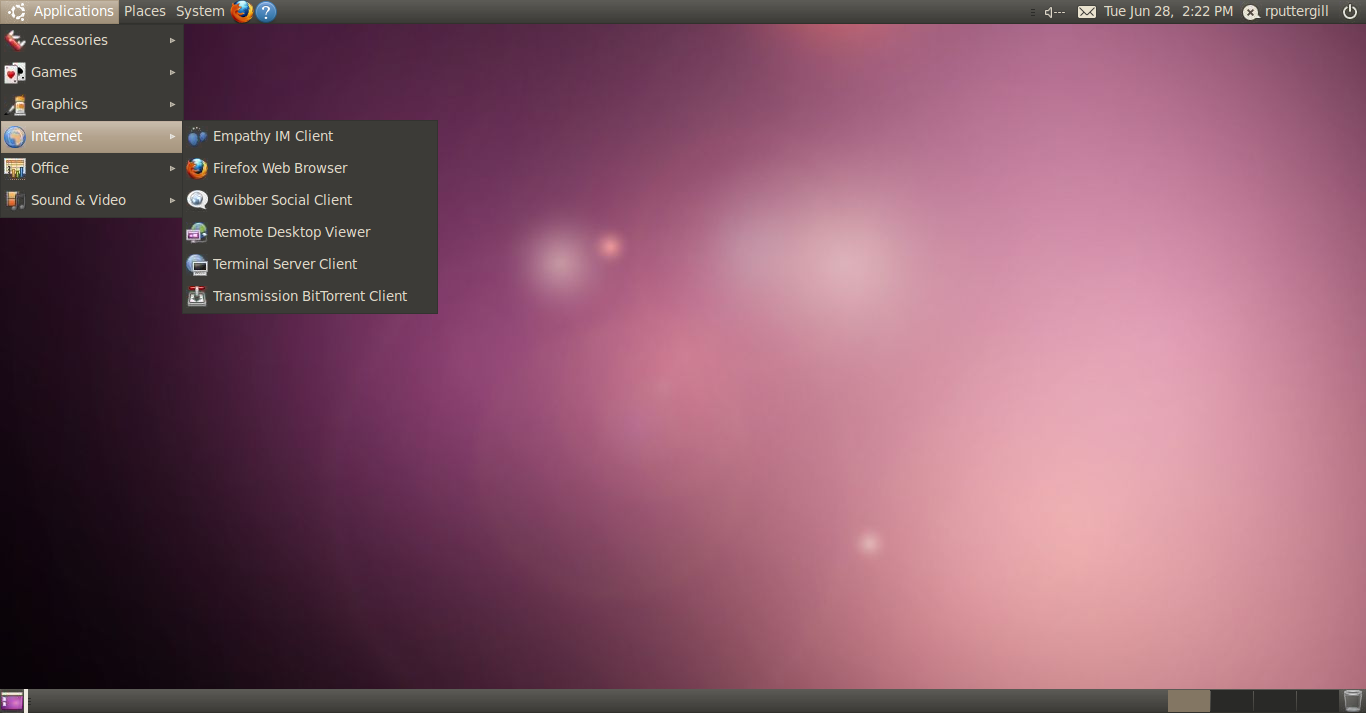

Depending on the desktop environment, you should be able to access the client inside the menu "Applications", usually in the submenu "Internet". Alternatively, you can execute the client GUI from the console using the command qvd-client.

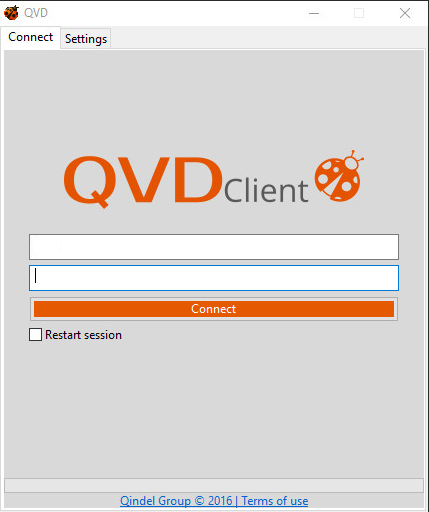

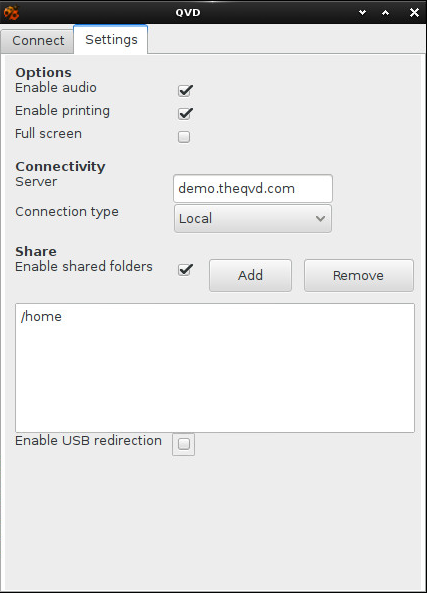

7.4. Connection to your virtual desktop

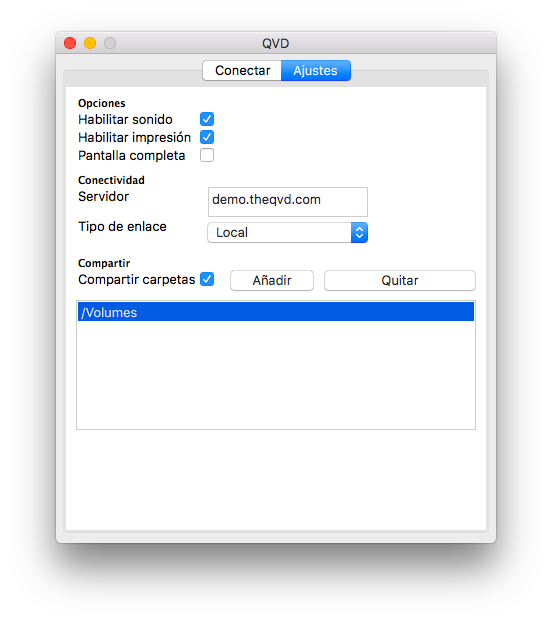

Once the GUI client is running, enter the User name of the user you created in QVD, the Password configured for that user and the Server host name or IP address of the QVD server node. You can also choose the compression level of the connection with Type of connection.

By default, the Type of connection is set to Local. This setting suits connections over a local area network. There are also options for ADSL, suitable for any broadband connection, and for Modem, for cases where the bandwidth is severely limited or the line quality is poor.

Selecting a slower type of connection (ADSL or Modem) increases the compression used to deliver the virtual desktop over the network. It also increases the amount of cache used by the client to limit the number of screen refreshes needed.

In general, even with heavy compression and caching, users can still work comfortably in their virtual desktops, although the graphic quality will be slightly lower.

Once you have entered the details of your connection, click the button labelled Connect and your virtual desktop should load.

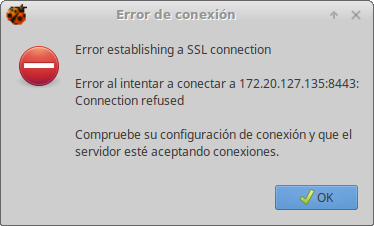

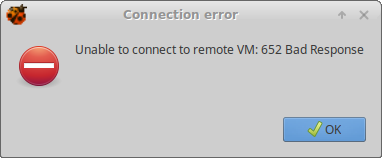

7.4.1. Possible errors in the connection

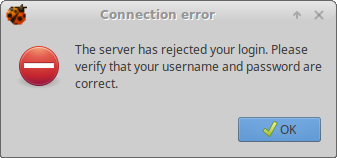

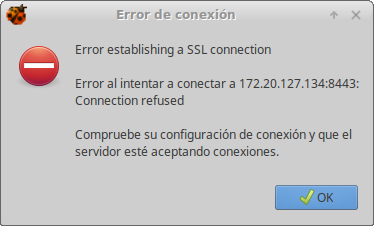

The client shows different error messages depending on the outcome:

-

Login error: The user/password pair is incorrect and a connection cannot be established.

-

Server Failure: There was a server failure and a connection cannot be established. This may happen because the HKD is not running.

-

Connection Failure: The connection configuration is incorrect and a connection cannot be established. Check the settings previously entered in the client, in particular the server URL.

-

Virtual Machine blocked: The virtual machine is blocked and a connection cannot be established.

7.5. Shared folder of the QVD client

QVD can provide access to local folders in the user’s client machine from the virtual desktop.

7.5.1. Use of shared folders

To activate this option, select it in the configuration tab.

When shared folders are activated for the first time, the user's home directory is redirected (%USERPROFILE% for Windows clients and /home/%user% for Linux and Mac OS X).

When the option is activated, a list of shared folders is shown. From this screen you can remove folders from the list and add others.

The folders will appear in the user's home directory, in a subfolder called redirect. GNOME and other recent Linux desktops will also show a shortcut icon on the desktop and in the file manager, depending on the configuration.

7.6. Additional configuration for the QVD client

The QVD client offers several configuration options that can be used to tune performance and customize the user experience. By default, these settings are stored in the file client.conf. Under Mac OS X and Linux, this file is located in the user's home directory as ~/.qvd/client.conf, and also in /etc/qvd, although the user's file takes precedence if it exists. On Windows, client.conf is located in %APPDATA%\.qvd\client.conf.

The QVD client GUI also offers convenient access to some of the basic user settings in the "Configuration" tab of the start screen. The tab can be shown or hidden in client.conf (see client.show.settings below).
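As an example of per-user configuration, the following minimal sketch writes ~/.qvd/client.conf so that the client always connects to one server over an ADSL-class link and hides the configuration tab. The function name and the host name are placeholders; the keys are the ones described in the next section. Note that it overwrites any existing client.conf.

```shell
#!/bin/sh
# Hypothetical helper (not part of QVD): write a per-user client.conf.
# loadbalancer.mydomain.com is a placeholder host name.
# Any existing ~/.qvd/client.conf is overwritten.
write_client_conf() {
    conf_dir="$HOME/.qvd"
    mkdir -p "$conf_dir" || return 1
    cat > "$conf_dir/client.conf" <<'EOF'
client.host.name = loadbalancer.mydomain.com
client.host.port = 8443
client.force.host.name = loadbalancer.mydomain.com
client.link = adsl
client.show.settings = 0
EOF
}
```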

7.6.1. QVD configuration file

You can specify the following additional options to control the client software on a workstation:

client.link = local
client.geometry = 1024x768
client.fullscreen = 1
client.slave.enable = 1
client.slave.command = "/path/to/custom/slave-command"
client.audio.enable = 1
client.printing.enable = 1
client.host.port = 8443
client.host.name = loadbalancer.mydomain.com
client.user.name = guest
client.use_ssl = 1
client.force.host.name = loadbalancer.mydomain.com
client.force.link = local
client.remember_password = 0
client.show.remember_password = 0
client.show.settings = 1
client.usb.enable=
client.usb.share_list=139a:0608@3210bf331301,0f45:2421
client.file_sharing.enable=1
client.share.0=/home/user/Documents
client.share.1=

The values that appear above are the ones set by default:

-

client.link: can be: modem, isdn, adsl, wan, lan, local or a specification of bandwidth (56k, 1m, 100m…)

-

client.geometry: the screen size reported by the client. If it is not set, the client uses full screen mode

-

client.fullscreen: enables full screen mode

-

client.slave.enable: enables or disables the slave channel, which handles shared folders and printing

-

client.slave.command: command to execute for the slave

-

client.audio.enable: enables the PulseAudio server in the client. The user's virtual machine image must support this option, otherwise it will not work

-

client.printing.enable: Enables shared printers in the client. The user's virtual machine image must support this option, otherwise it will not work.

-

client.host.port: HKD port to which the client must connect

-

client.host.name: HKD host or load balancer to which the client must connect

-

client.user.name: User’s name for the authentication (in multitenant mode the format would be user@tenant)

-

client.use_ssl: Enables or disables the use of SSL in the communication with the QVD server. Enabled by default

-

client.force.host.name: Forces the client to connect only to this host name (the user cannot change it through the graphical interface)

-

client.force.link: The same as the previous one but for the type of link

-

client.show.remember_password: Controls whether the user is offered the option of saving the password (the Remember password option is shown in the GUI)

-

client.remember_password: It controls if the user’s password is saved or not

-

client.show.settings: Shows or hides the configuration tab

-

client.usb.enable: Enables the sharing of USB devices (Linux only)

-

client.usb.share_list: List of shared devices

-

client.file_sharing.enable: Enables folder sharing

-